What Makes an Open IoT Developer Challenge Winner!

MoDeS3: Promotional video

Statecharts are everywhere! #6 – Verification example

As we mentioned in a former post, Bence, who is our colleague, has developed a model transformation plugin for YAKINDU as well. The plugin transforms a chosen SCT model into timed automata, and automatically generates CTL expressions for formal verification.

Let’s have a look at its theoretical background and a practical example.

Theoretical approach

The workflow of the transformation is depicted above. First, the YAKINDU Statecharts to UPPAAL Transformation Engine parses the Statechart design model (the SCT file), and looks for different mathces of structural graph patterns in it.

The patterns were composed in the Query Language of EMF-IncQuery. For composing these patterns the metamodel of the YAKINDU Statecharts were used, because the statcharts in the SCT model are the instances of that metamodel.

After that, the Transformation Engine transforms the given statecharts to timed automata acceptable by UPPAAL. In order to achieve it, we used the metamodel of UPPAAL. The metamodel is publicly available and was designed at the University of Padernborn. The transformation code was written in Xtend and it extensively uses the EMF Reflective API.

Finally, the Transformation Engine creates UPPAAL automata that adhere to the statecharts, and generates CTL expressions to make model checking automatic. You can find details on model checking in a former blogpost.

Practical approach

Now let us show you in an example what our YAKINDU Statecharts to UPPAAL Transformation Engine is capable of. First of all, we need a Yakindu model that we want to transform. We have made a simple timer statemachine just for this demonstration.

As you can see, the model consists of two states. In Idle state the system does not do anything. In Running state the system measures time. Also, there are two events that can be used for controlling the system. Finally, there is an integer variable that symbolizes elapsed time.

Let us transform this statemachine to UPPAAL. This is what we get:

Control template:

As you can see, the transformer creates two templates. The first one is the UPPAAL equivalent of the YAKINDU statechart. The second one is a control template that is used for firing events.

Let us see what transformation rules the transformer uses:

- Events are transformed to synchnronization channels

- Integer variables are transformed to int variables

- Vertices are transformed to locations

- Transitions are transformed to edges

- Updates of transitions are transformed to updates on edges

- Time events (after .. s) are transformed to clock variables, location invariants and guards on outgoing edges

So what can we use this UPPAAL model for? First, you can run simulations on it. With the control template you can fire events, so transitions and actions can take place in the primary process. You can see the actual active locations of templates, you can follow changes in values of variables etc. Here is a picture of a simulation on the timer model:

Second, queries can be created for checking certain properties of our models. Let us say you want to know whether your statemachine is able to reach a certain state, or a value of a variable can be negative. You just have to create a query that describes the condition and the Verifier will tell you whether it is possible or not in your model. If it is possible, even a simulation trace is created that shows you the transitions taking your model into the certain state. Let us show you some queries:

The first query checks whether there can be a deadlock in the system. Fortunately, the model has been designed well, so deadlocks cannot take place.

The second and third queries check whether Running or Idle states can be reached. We create states because we want to reach them. If a state is unreachable, then something is wrong with the model.

The fourth query checks whether time can be measured for longer than 9 seconds. We created this model to measure time, and according to the Verifier we can do so for at least 10 seconds.

The fifth query checks whether the elapsed time can be a negative value. If it was possible, then something would be very wrong with the model. Fortunately, this is not the situation now.

Summaries of the MoDeS3 projects

In the last couple of weeks we have finished the open IoT Challenge submissions. One showed a control system for a Lego robot arm which executes missions. The control logic was developed with model-based development tools, computer vision observes the environment, simulation is used at design and also runtime to analyse the behaviours. Various open-source technologies were used to ensure the interoperability of the system. The full summary is available: http://modes3.tumblr.com/post/140068568675/modes3-lego-robot-the-summary

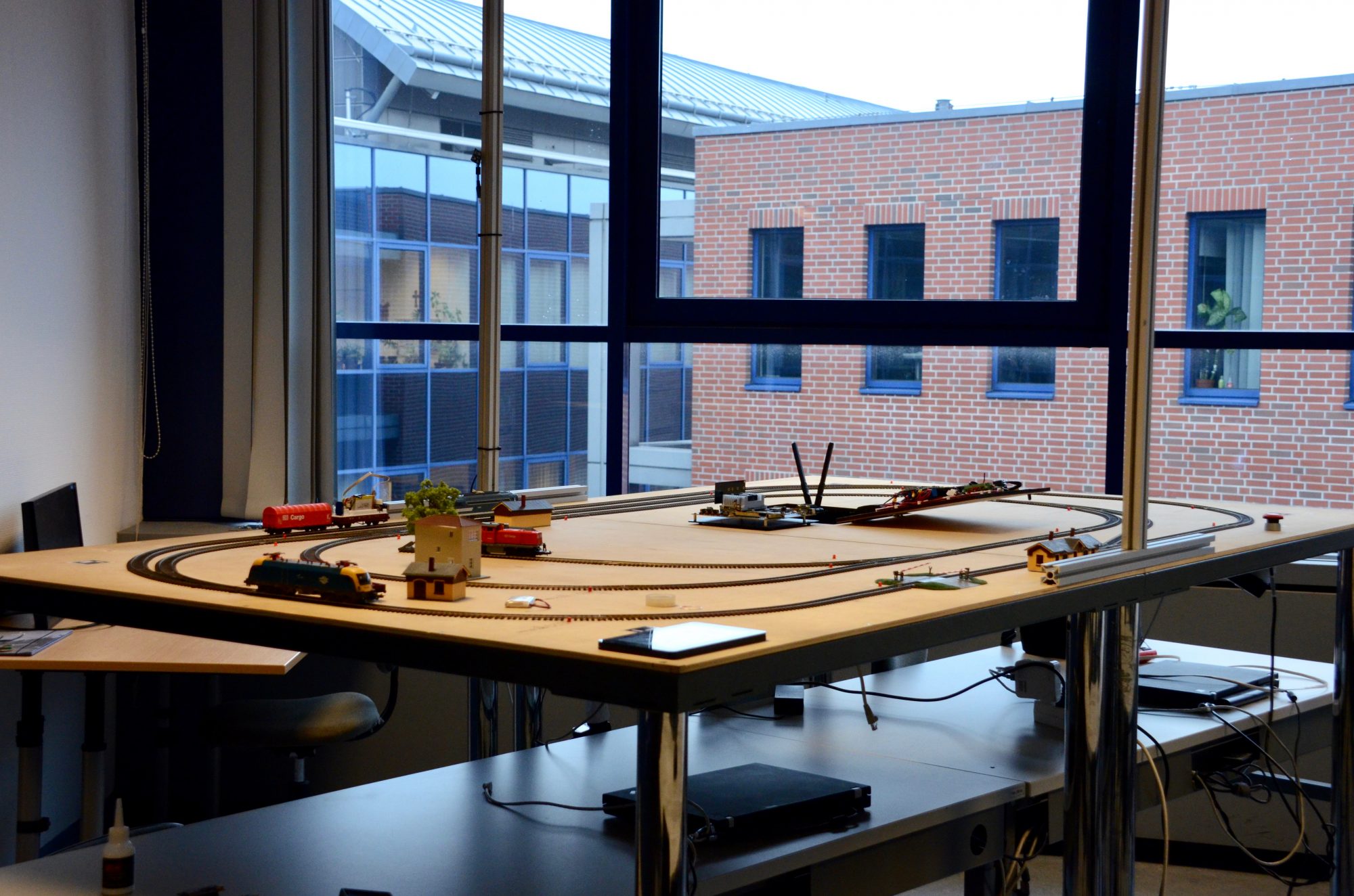

The second submission showed the development of a mixed-critical system, where various technologies were used to ensure the accident free behaviour of a distributed railway system. Beside the many open-source technologies we employed, also various verification techniques were used in the project, namely testing, runtime verification and model checking. For further details, please read our post: http://modes3.tumblr.com/post/140068608695/modes3-railway-system-summary-of-the-project

Note that in conclusion, the Lego robot arm is placed on the table of the railway system, so the whole system is built on top of two sub-systems executing different missions.

All the developments are detailed in our blog! For further questions, do not hesitate to contact us!

The bigger picture

Now, that the Open IoT Challenge is over, we have some spare time, therefore I try to explain for you how the hardware works and how was made. It won’t be a very long post, you shouldn’t read long paragraphs about electrons or voltage, but I will insert a tons of pictures into it, I should insert a read more link here. But trust me: it is worth watching them!

Superbeagles are on the road!

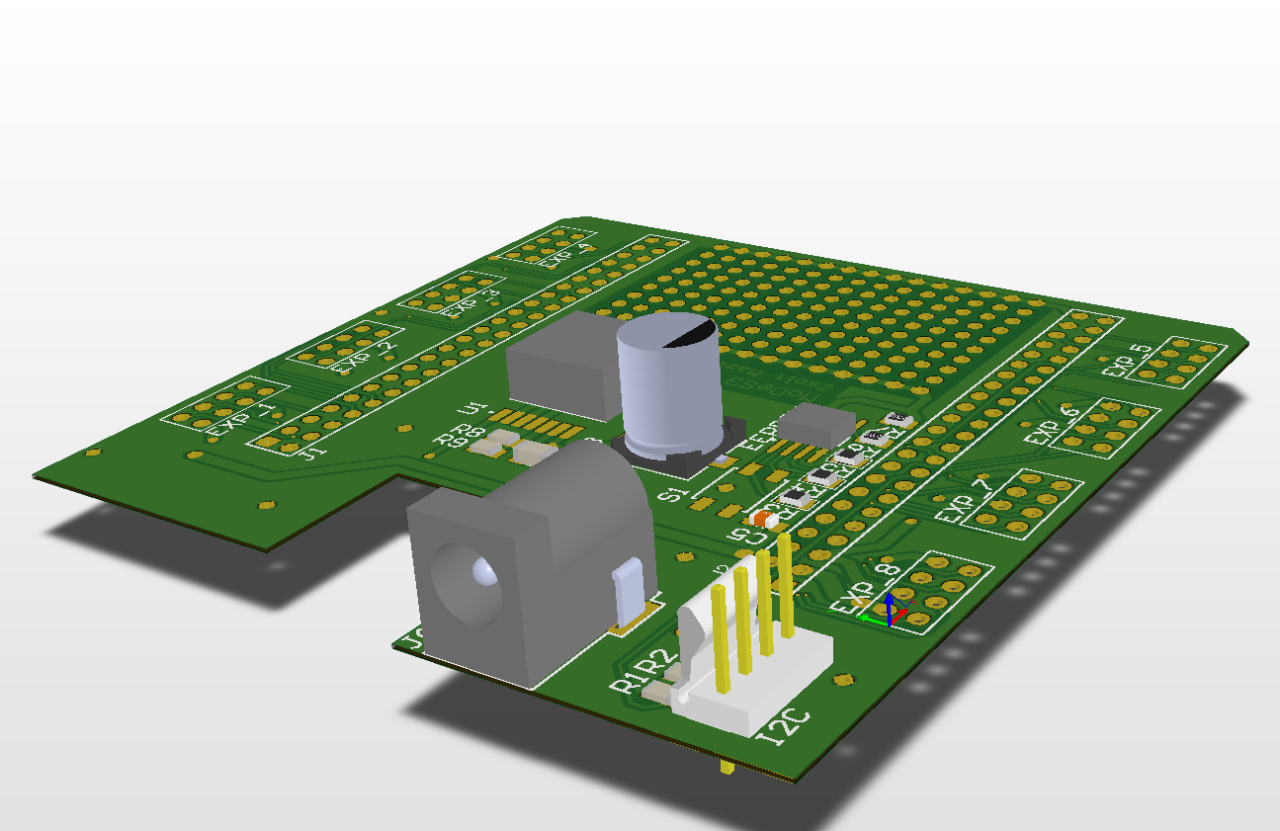

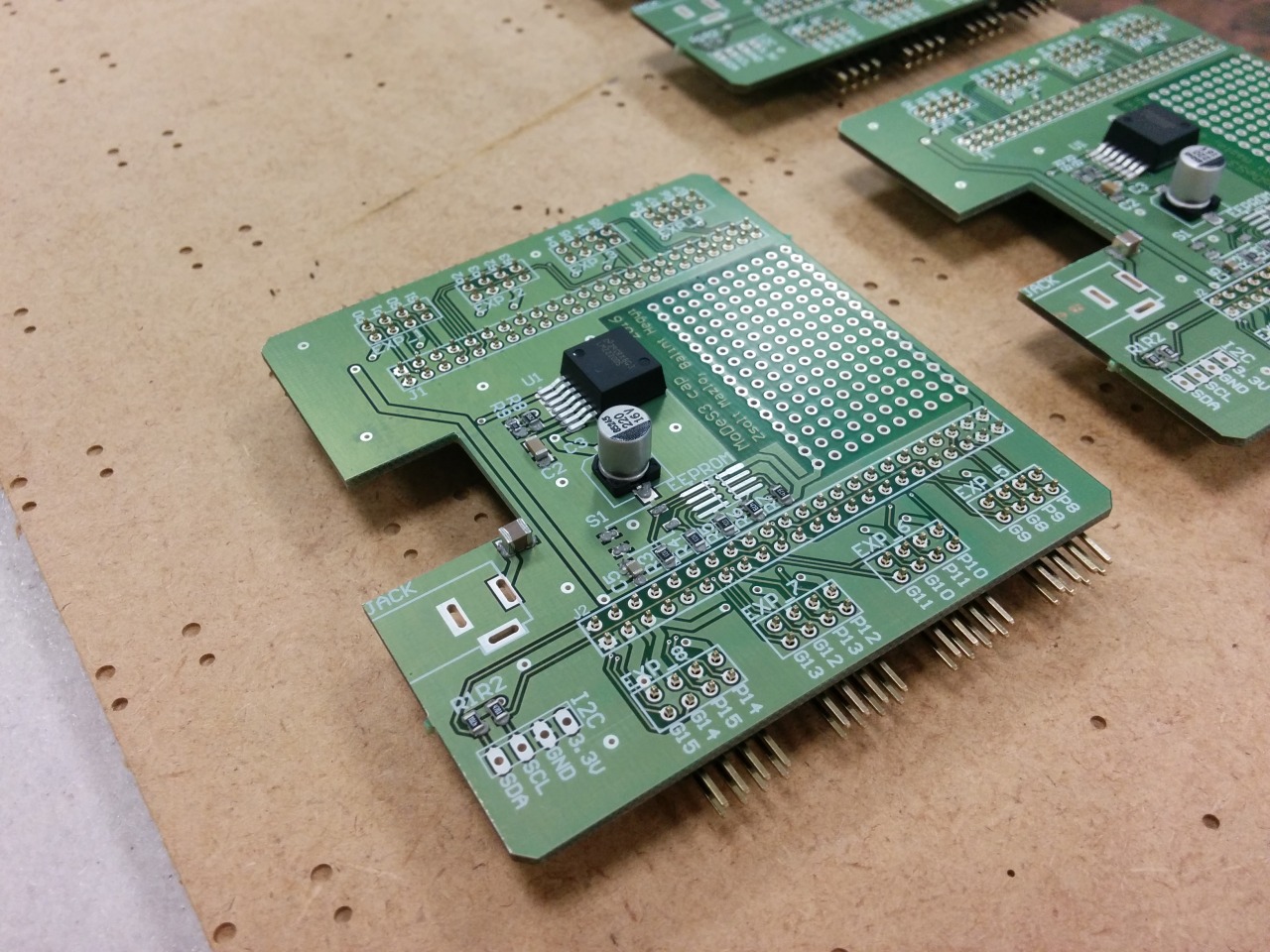

Previously one of my colleague, Bálint already wrote about why the Beaglebone Black board is so amazing, but in an other post I mentioned our little problem with the hardware – so I did managed to make a Cap for the beagle to make it Superman Superbeagle.

The design:

And the reality:

With this Cap, the Beaglebones could accept any power source between 7V and 20V, we have 8 pin headers to attach expander panels… oh wait, did I mentioned that we designed expanders also? No? My bad.

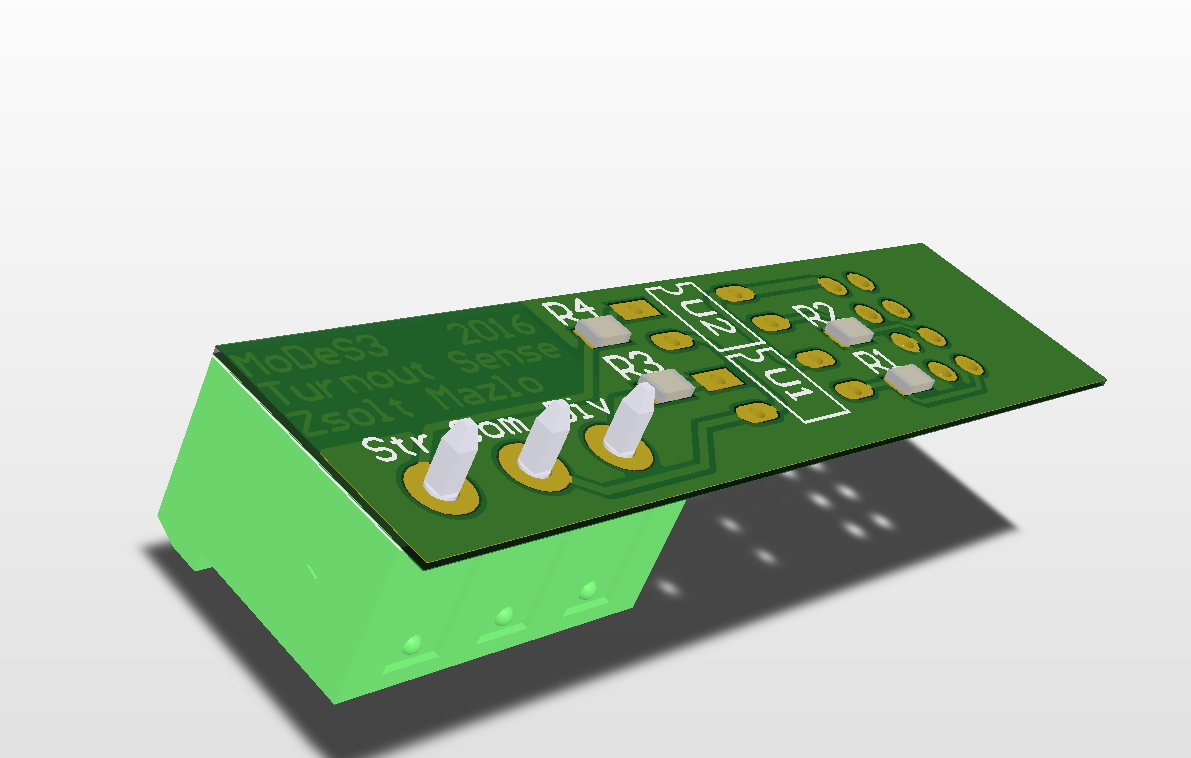

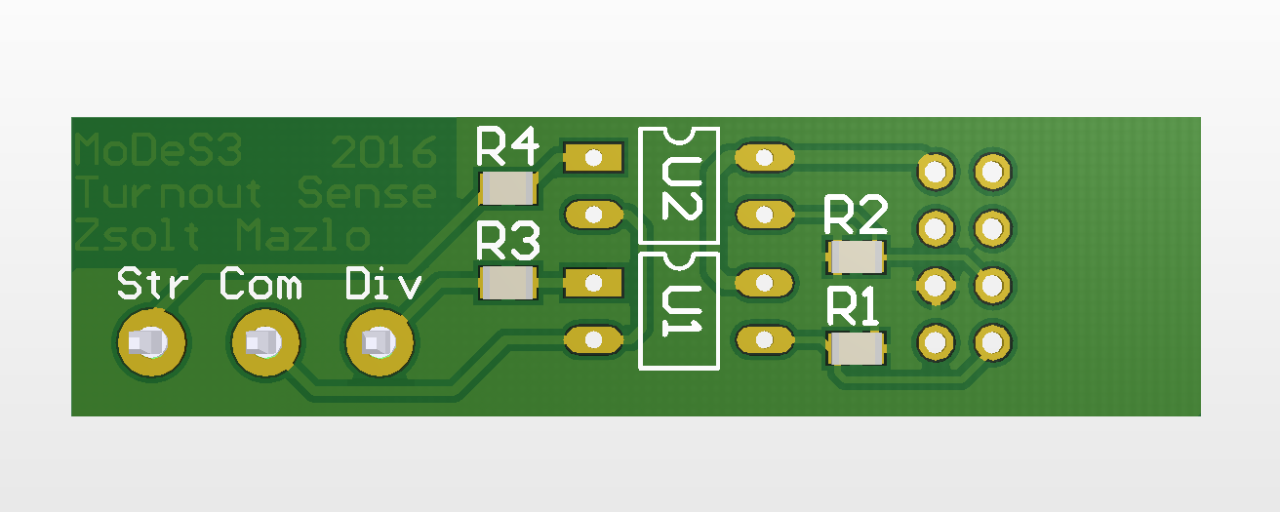

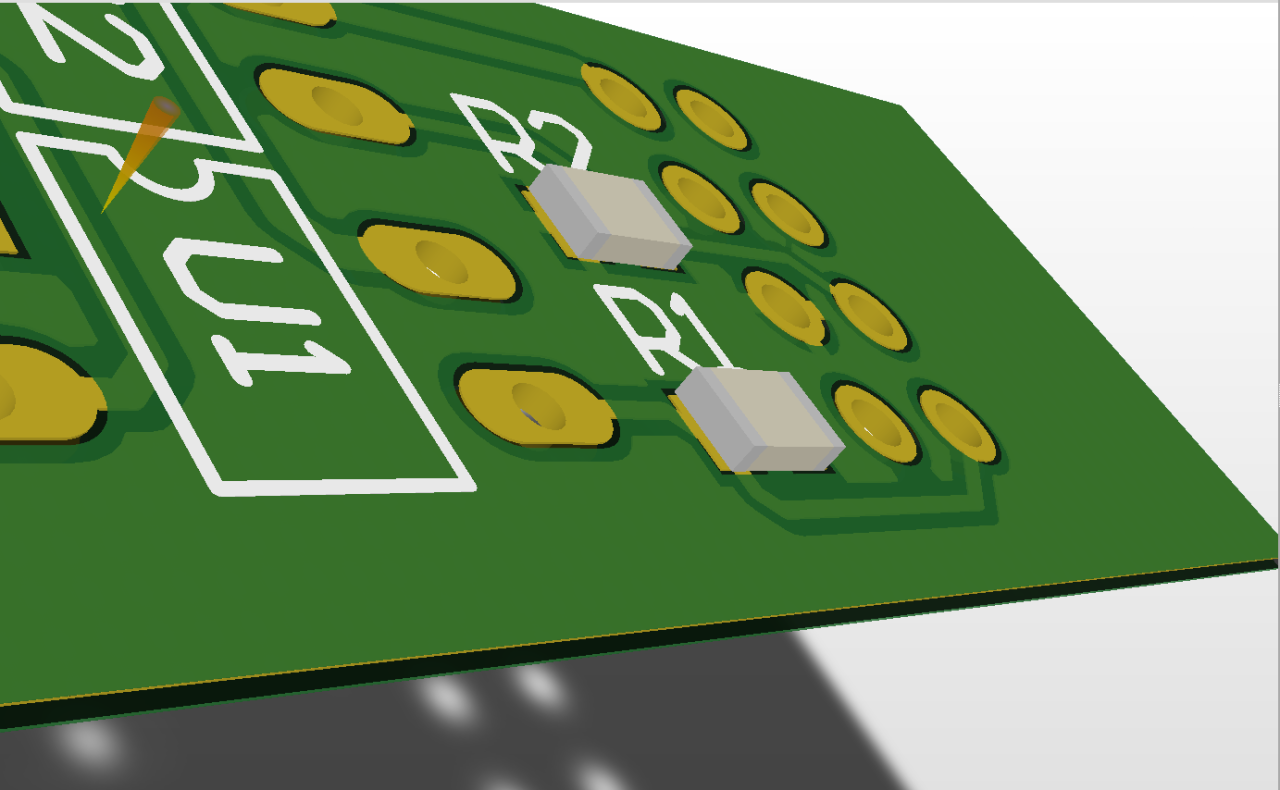

Not a rinse commercial: turnout sensation

One of the many responsibility of the hardware is that it need to sense somehow the status of the turnouts. These informations are highly important: some cases, in which trains are moving towards each other, they could collide, so we should stop them; but if the turnout is in the right position, then we could pass one of them through the turnout and even avoid the collision. With this knowledge, we could make the movement of the trains continuously beside the safe operation.

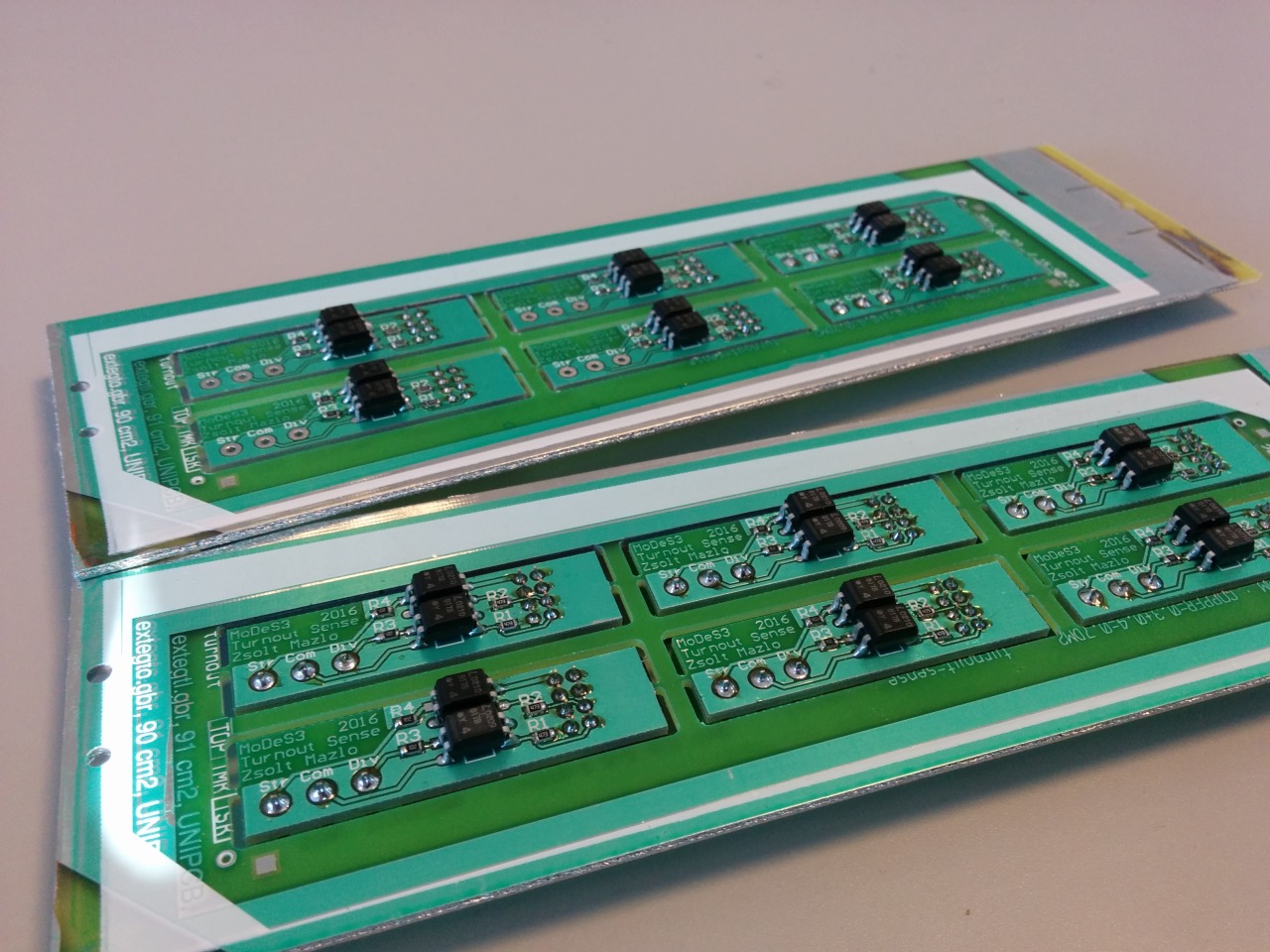

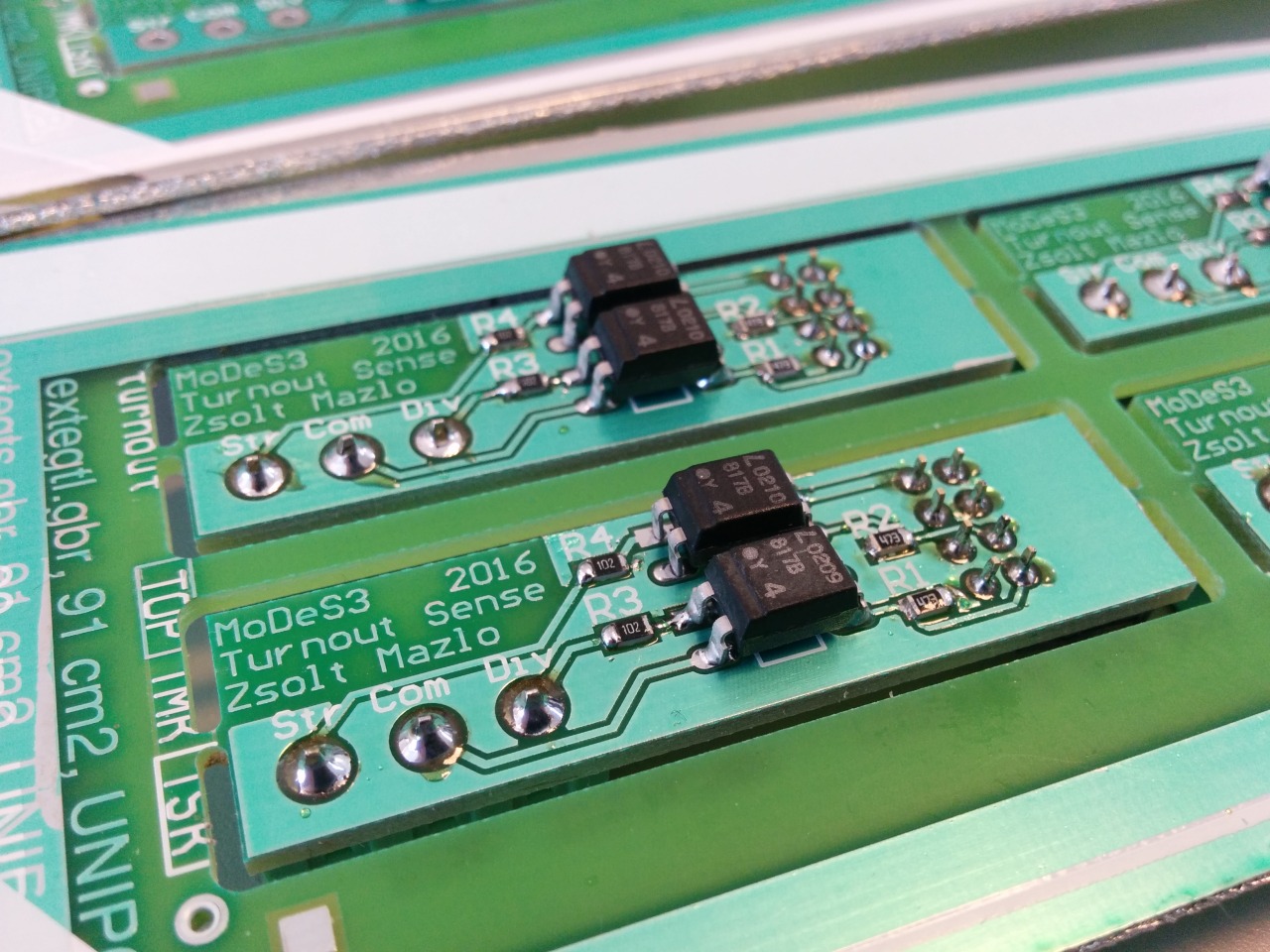

Therefore we made some measurements about how the turnout actuators are work and after that we created a PCB to handle this.

Here is the design:

And the reality:

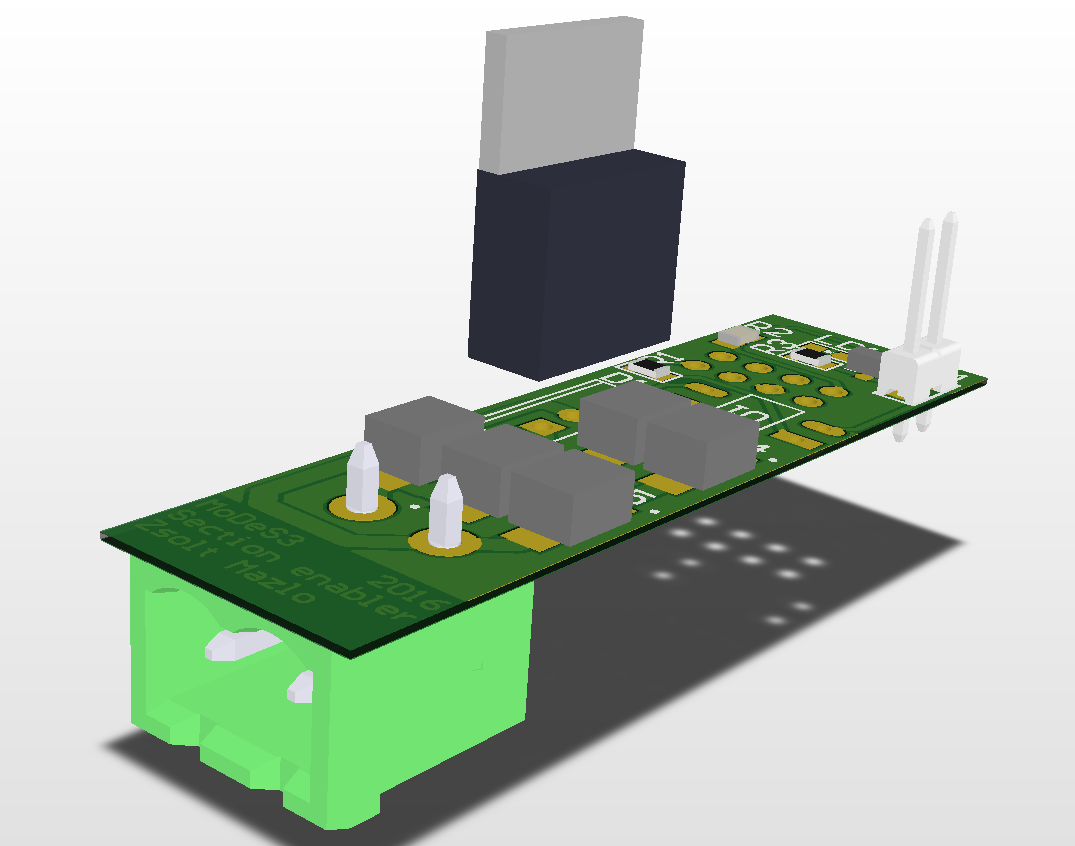

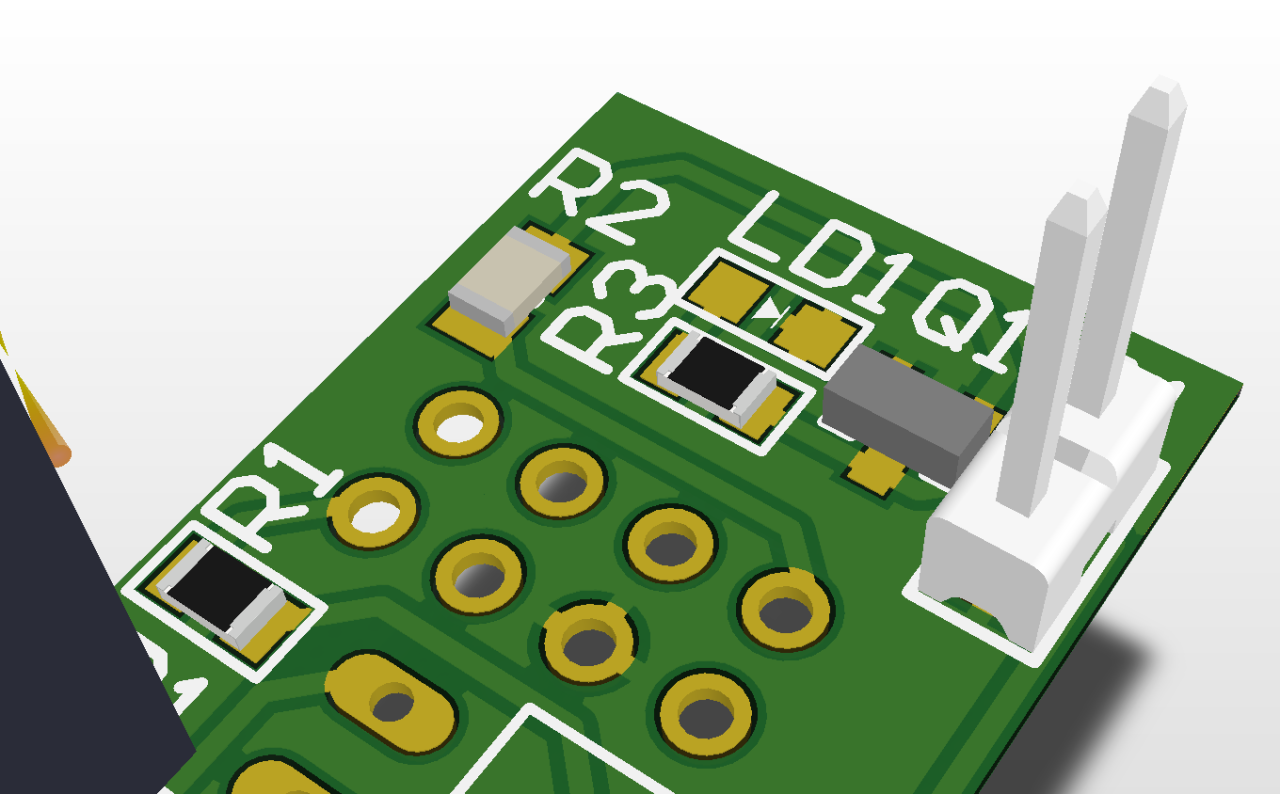

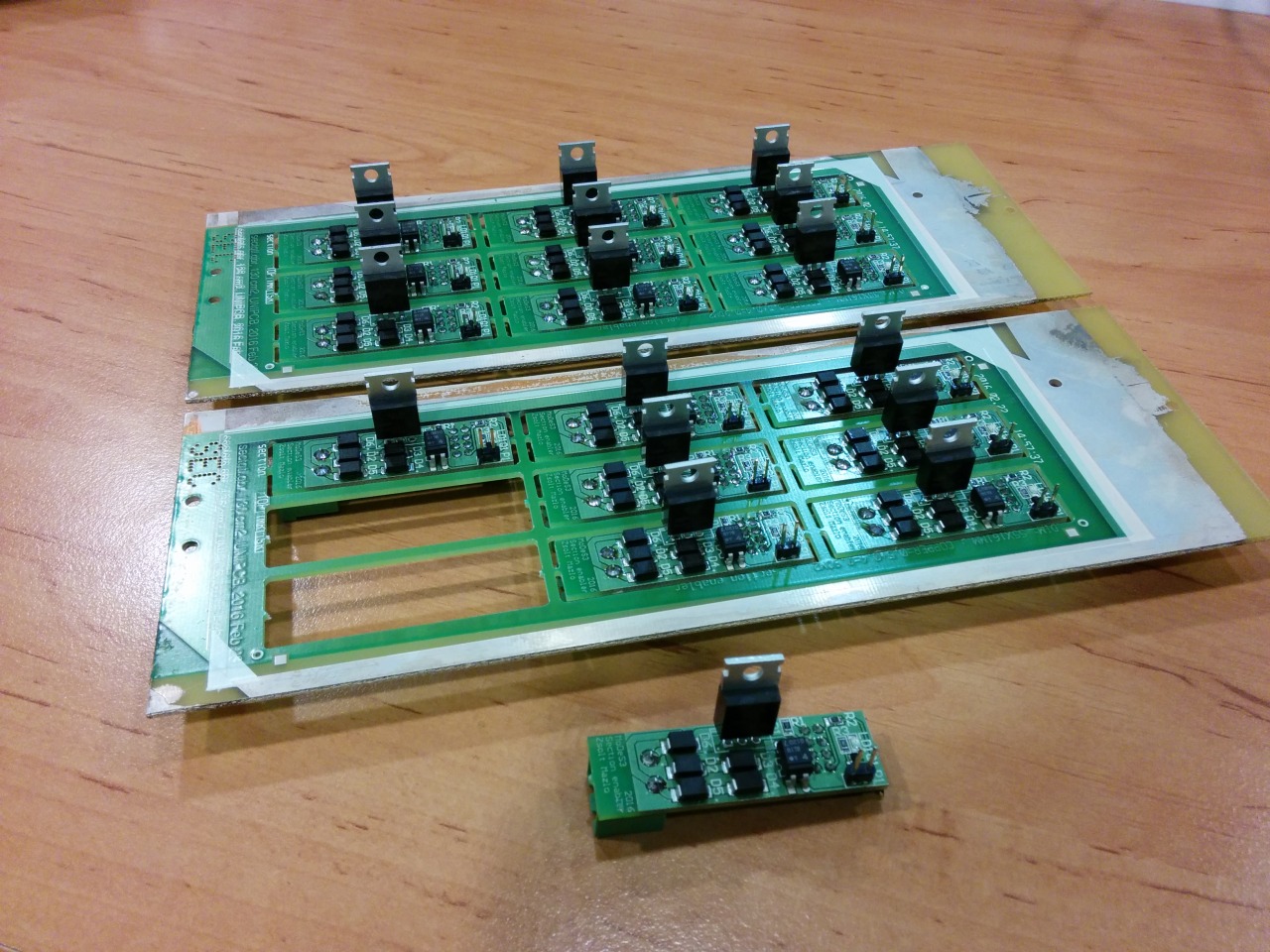

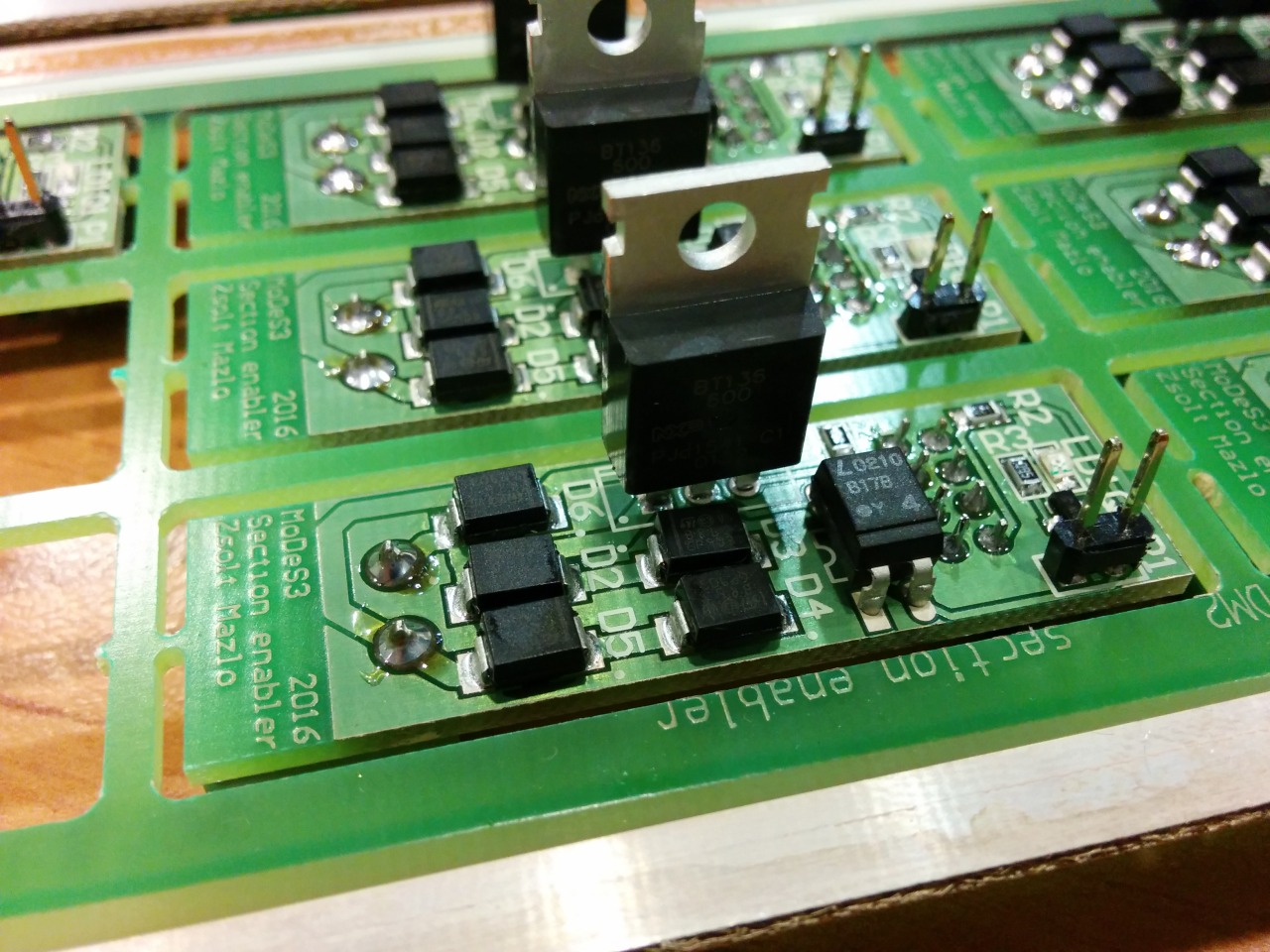

Section enablers

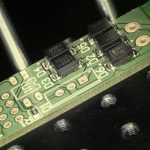

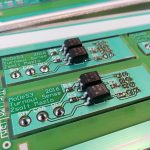

Okay, let assume that we are getting every information about the track that we need: the location of the trains, the turnouts’ status, and so on, and one time, we need to stop one train: but how, you may ask. That’s the reason why we designed and assembled the section enabler or disabler PCBs: in the near past I wrote about the mechanism.

Design:

Reality:

MoDeS3: Lego robot video

Finally the trains were stopped!

MoDeS3 – Railway system: Summary of the project

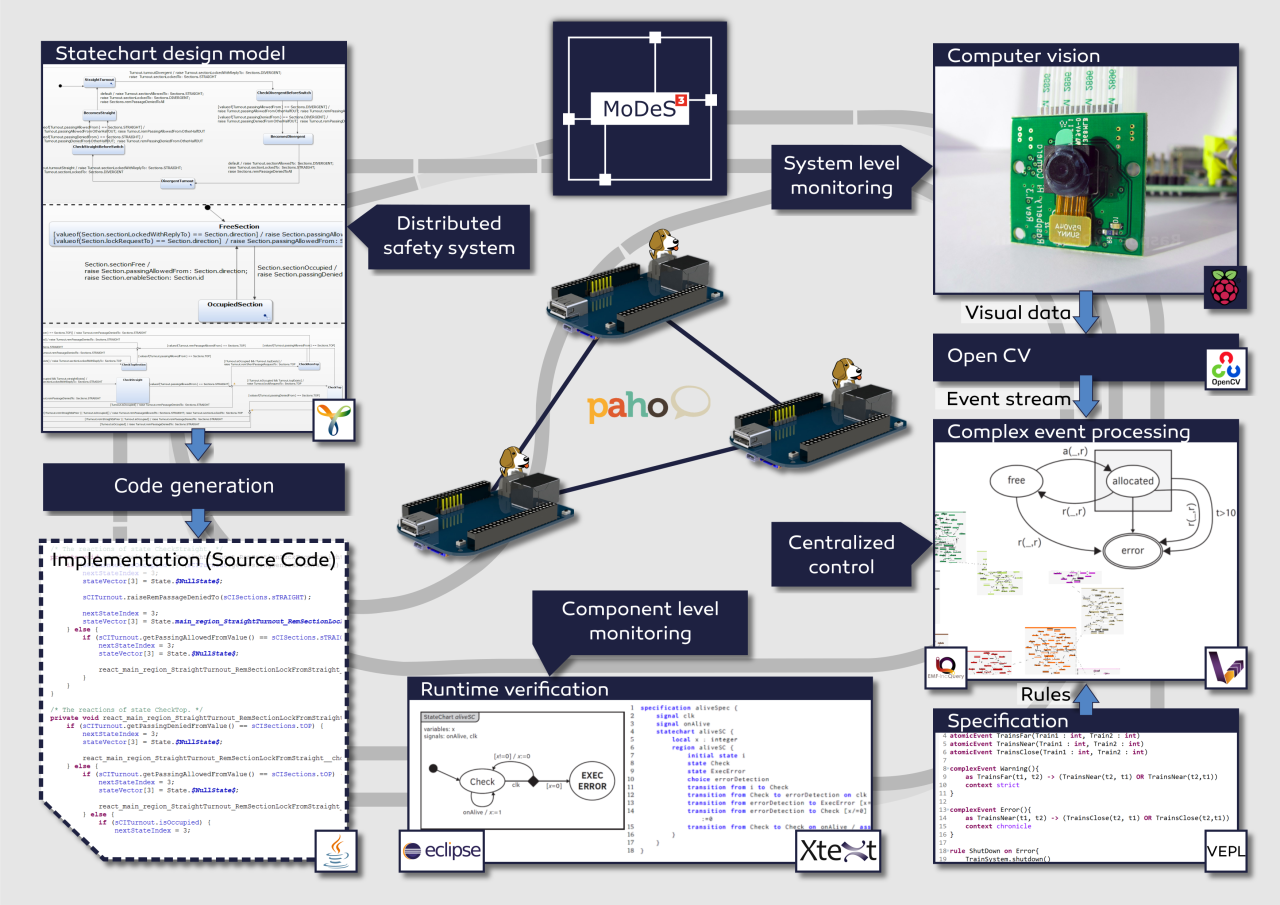

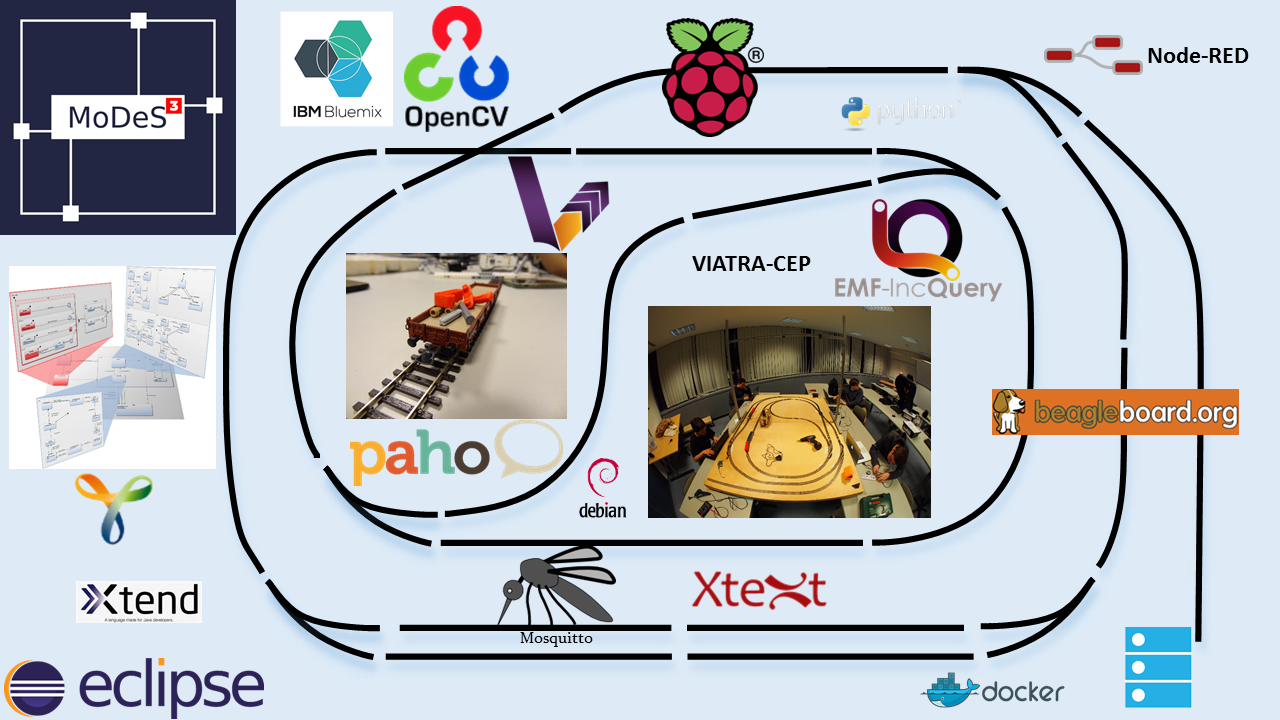

In the last two months we have invested much time to demonstrate model-based development with the help of a mixed-critical model railway system. We wanted to show the newest techniques that can be used to develop highly reliable safety critical systems.

We started from an old hardware architecture, but for this project we have completely redesigned the hardware architecture to meet the new requirements of the envisioned system – we wanted to demonstrate various development, verification, and validation techniques, integrate them, and use a real-time open-source operating system on the controllers. After the architectural plans were made, we’ve decided to use BeagleBone Blacks that’ll run a special Debian based Linux (with a real-time kernel developed by Texas Instruments).

The development of safety critical systems starts by clarifying the requirements – in this case our goal is avoiding crashes and accidents. Then comes the more difficult part: model-based development of the distributed logic. This allows verification and code generation based on the model, resulting in a more reliable system. This was conducted in the open-source Yakindu Statecharts Tool. Validation and verification rules were utilized to find design problems at the early stages of development. The validation rules were defined with the help of the open-source IncQuery engine (which is used for graph-queries), while the transformation rules were implemented in the VIATRA framework. The source code of the distributed logic was generated automatically from the design models.

MQTT is used as a communication platform, Mosquitto and Paho provided the technology to implement the communication infrastructure of the system. These IoT technologies proved their applicability in time critical systems. Our experience was that they are not only easy to use, but these technologies provide realiability and the performance needed for our project.

Conceptually, we had two different levels of monitoring. One level runs on the programmable real-time unit of the BeagleBone hardware, and another one runs at the system level.

We have developed a language to support the runtime verification of the local components. A special statechart language is provided based on Xtext from which we can generate embedded monitors.

On the top level, there is a computer vision based monitoring system. The video is processed real-time by OpenCV, which is an open-source library of computer vision techniques. An open-source comlex-event processing engine maintains an abstract view represented in a live model. IncQuery patterns are used to define the critical event in the system and VIATRA-CEP processes the event stream. Our developments will hopefully appear in the future in the official version of VIATRA-CEP.

During this challenge one of the main difficulties regarding the complex-event processing part was creating a program module which can be later integrated to an open-source project.

The not technology related complexity was to design and implement a mathematical formalism which is appropiate for using in Complex Event Processing Frameworks.

Using the open-source IoT technologies of Eclipse was challenging at first but since they are well-documented and intuitive to use they were simple to use after all. Integrating the complex-event processing engine to other open-source communication technologies was not difficult.

We have tested the system in the IBM Bluemix cloud in Docker containers and also on the real hardware. Node-RED flows and the generated statechart codes have been deployed into IBM Bluemix. Each turnout has its own statechart and they run separately, connected through the Node-RED flow, to make the communication easier and to use cutting-edge Internet of Things technolgy!

The high-level overview of the system is depicted on the next picture.

During the development we have faced theoretical challenges such as the new formalism for complex-event processing, the development of the distributed safety-logic or the verification of the components. On the other side, we have solved many technology related problems, integration tasks or more involved problems.

Besides, we have shot some videos as well: Model Railway Track Side Perspective, Model Railway Onboard Footage #1 and #2, Restlessly building the hardware. The videos are available at our blog.

Here is a picture for a quick overview of the technologies we used.

During the developments, we have also contributed to the open-source world! Besides, all modules we implemented are open source and available in our GitHub repository.

For more details, read our blog!