MoDeS3: Lego robot video

MoDeS3-LEGO Robot – The Summary

The goal of our Eclipse IoT challenge project is to develop a robot arm which can execute tasks. This involves integrating multiple information sources into one framework. Simulation is used for both design-time and runtime analysis of the system. Computer vision is responsible for recognizing the objects to be moved and for detecting dangerous situations. The controller is designed with the help of statechart models, and automated code generation provides the implementation. Various open-source IoT technologies provide the communication and integration between the components.

The result is a complex IoT application with many communicating components ensuring the execution of tasks related to the robot domain. Note that in this phase our robot executes only simple tasks; however, the architecture can easily be configured to execute more complex missions too.

From the theoretical side, the most involved tasks were the following:

- Designing a controller with model-based techniques. In the end, we chose a hierarchical solution with multiple layers of abstraction. A simple mission is depicted here.

- Developing a physical model in OpenModelica that is precise enough to predict the behaviour of the robot.

- Configuring OpenCV to recognize the situations and minimize false alarms.

We have successfully implemented a model-driven LEGO robot crane and summarize our research and engineering successes in the following points.

- We have developed and built a LEGO crane with multiple motors and sensors.

- We have developed custom Python scripts to control the robot and prevent dangerous situations.

- We have developed and analysed the control logic as Yakindu statechart models to control the robot movements and specify complex and hierarchical tasks.

- We have implemented the communication infrastructure with the MQTT protocol to communicate with remote components, sensors and the robot. We utilized Eclipse Paho and Mosquitto.

- Additionally, we have applied sensors and complex computer vision to detect terrain objects around the robot and guide it. We used OpenCV for this purpose and MQTT for the communication.

- We have simulated the behaviour of the motors and the physical environment with OpenModelica to continuously analyse and predict the future states of the robot. Note that this component was designed to support cloud deployment to exploit the huge computational power of cloud systems.

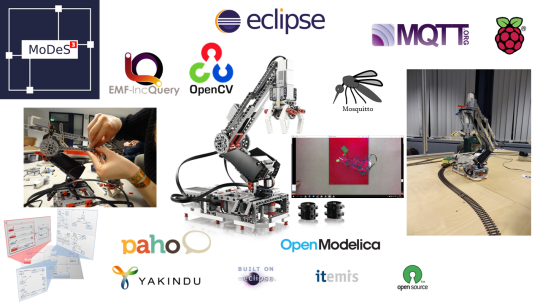

For an overview of related IoT technologies, see the picture below:

For a short demo, find the related post here!

For further details, please read our blog!

Model-driven development of the LEGO robot – An overview

Introduction

In the middle of the summer we found an EV3 robot arm, so we decided to start developing a model-based, safety-critical control program for it.

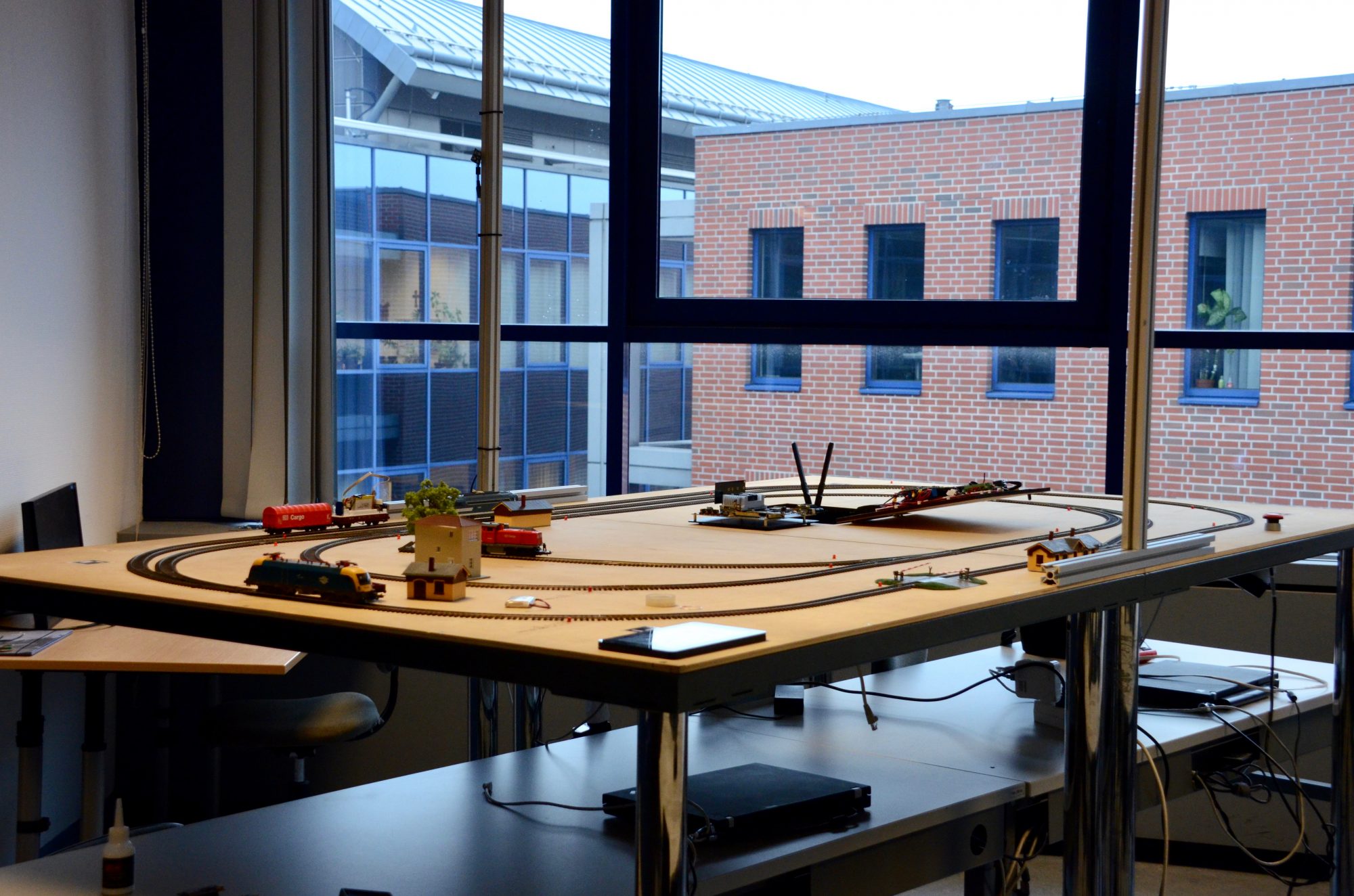

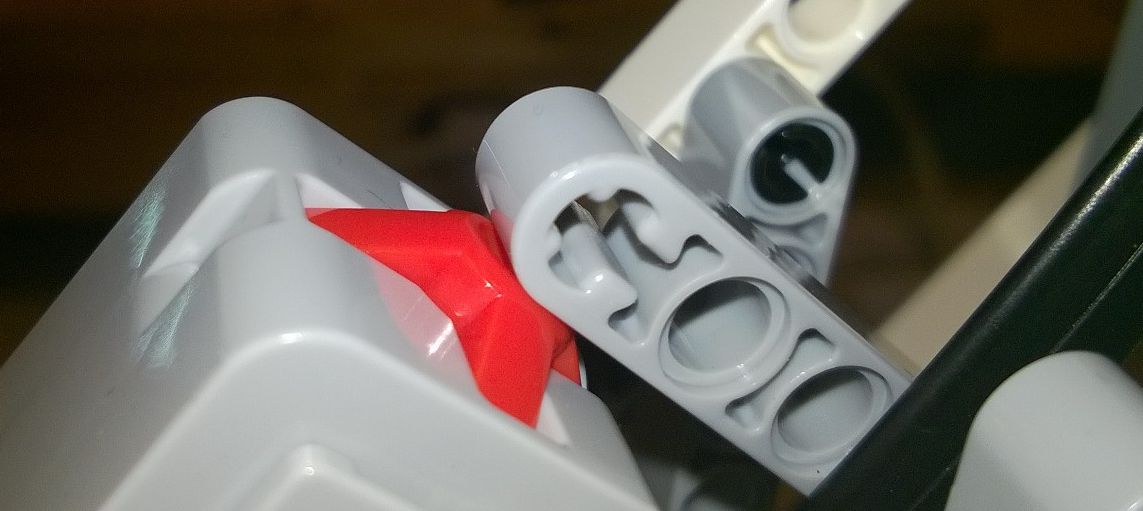

This robot had three motors and two sensors: one touch and one color sensor. It was built according to the step-by-step guide which you can find on this website. Later we enhanced it by adding a new motor. With this new structure, as you can see in the picture below, we are able to rotate the gripper head.

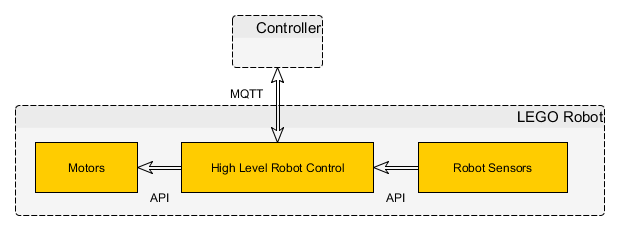

We have implemented the control for the LEGO crane (robot arm) on top of multiple communicating components. The basic architecture is depicted below:

As can be seen, there are multiple functions in the system: Yakindu is used to develop the control with the help of statecharts, communication uses MQTT, and the slave runs on the robot on an embedded processor. Simulation is used to predict movements; OpenModelica is used for this purpose. Computer vision with OpenCV gathers information from the environment and sends alerts to the controller. In addition, it provides information about the object to be moved.

LEGO Robot architecture

In the first component you can see the EV3 itself. We have implemented a Python script which directly controls the robot's motors and is able to access sensor information or detect if a motor is overdriven. Generally, the Python script receives commands from the controller component and executes them, although it also has some local reflexes in order to prevent motor overdriving. For example, if any of the touch sensors is pushed, it stops all movements automatically. As you can see in the pictures below, there are two touch sensors: one indicates if the robot arm moves too high, and the other if it tries to turn too far to the right. Some more details are provided in this blog post.

Communication

As mentioned above, the controller component sends the control commands. But how does it do it? Almost all of these units communicate through MQTT. This protocol provides high maintainability and reconfigurability, which made it relatively easy to add new features. Every unit has its own topic where it publishes, and it subscribes to the topics of the other units. We’ve developed hierarchical topics to organize the messages. The robot component communicates only with the controller component, which has further connections to the ‘user’, ‘observation’ and ‘prediction’ units.
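To give an idea of what a hierarchical topic layout could look like, here is a minimal sketch. The topic names (`modes3/robot/...` and so on) and the `SUBSCRIPTIONS` table are hypothetical and not the project's actual topics. The matcher is a small stand-alone reimplementation of MQTT's `+` and `#` wildcard rules (simplified, without the special handling of `$` topics), so the example runs without a broker.

```python
# Hypothetical layout: <project>/<publishing unit>/<category>/<detail>
SUBSCRIPTIONS = {
    # The robot only listens to commands the controller addresses to it.
    "robot": ["modes3/controller/robot/#"],
    # The controller listens to every other unit.
    "controller": [
        "modes3/robot/#",
        "modes3/user/#",
        "modes3/observation/#",
        "modes3/prediction/#",
    ],
}


def topic_matches(subscription: str, topic: str) -> bool:
    """Return True if a topic matches an MQTT subscription filter.

    '+' matches exactly one level, '#' matches all remaining levels.
    """
    sub_levels = subscription.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(sub_levels):
        if level == "#":
            return True  # multi-level wildcard: everything below matches
        if i >= len(topic_levels):
            return False  # the topic is shorter than the filter
        if level != "+" and level != topic_levels[i]:
            return False
    return len(sub_levels) == len(topic_levels)


def receivers(topic: str) -> list:
    """List the units whose subscriptions match a published topic."""
    return sorted(
        unit
        for unit, filters in SUBSCRIPTIONS.items()
        if any(topic_matches(f, topic) for f in filters)
    )
```

With such a layout, a message published on `modes3/robot/sensor/touch` reaches only the controller, while `modes3/controller/robot/motor/stop` reaches only the robot. In a real deployment the broker does this matching; the Paho Python client also ships a `topic_matches_sub` helper for the same check.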

User Interface

So let’s talk about the other units! It is obvious that we wanted to have control over this little robot, so we developed a graphical user interface in order to control its movements.

As you can see, there’s no button for moving the arm vertically or horizontally; these functions are controlled with the arrow keys. This ‘user unit’ sends the commands to the ‘controller unit’, which processes them and then sends a message to the robot. The GUI also shows the messages we’ve sent and received.

It is also possible to switch to automatic operation. Here comes the logical question: what can this robot do automatically? To be honest, we also found a model railway next to this robot that summer, so we thought the EV3 could move the cargo of the trains automatically. Obviously, this needs more help: more information about the environment. That is why you can also see a monitoring component in the architectural view.

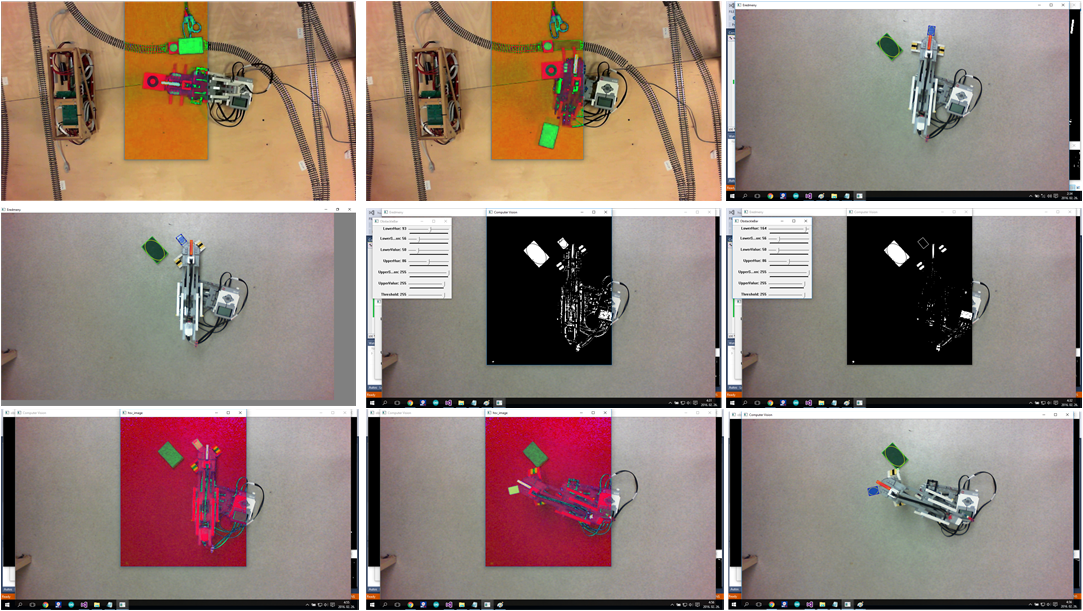

We observe the robot and its environment with a camera, so we can analyse the situation in real time using OpenCV3. This computer vision application sends messages to the controller. It warns the robot if an obstacle gets in the way. It notifies the controller when a train has arrived at the desired place; this message also contains information about the orientation of the cargo. We can also ask it for other information, for example the orientation of the gripper head; we use this function when initializing the robot’s motors.

For more details, see the dedicated blog post.

Controller

It’s time to see what the controller component does. This unit is responsible for the logic of the operation. We used the open-source Yakindu Statechart Tools toolkit to model the control scenarios. The safety-critical parts are first modelled as state machines, and these design models are then used for code generation: the produced source code can be deployed and integrated with MQTT to communicate with the controlled objects. The controller also receives information from the computer vision parts and reacts to the events.

Yakindu statecharts have some useful features: simulating the state machine models allows the dynamic semantics to be checked, and active states are highlighted directly in the statechart editor. In addition, Yakindu has many built-in features, for example validation rules, which turned out to be very useful during development. We also used the validation rules introduced in a former post to further increase the quality of the models, and various analysis runs were conducted to check the design models!

Two kinds of composition rules were applied during the development of the control models. The Yakindu tool provides built-in hierarchy, which we exploited in the design. We also decomposed the problem into two parts: one statechart provides the abstraction of the physical world and another statechart is responsible for the actual control. This decomposition significantly increases the reusability of the control logic: for other robots with similar missions it would be enough to implement a new abstraction layer and the control logic could be reused!
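The two-layer decomposition can be illustrated with a small hand-written sketch. This is not the code generated by Yakindu; all state, event and command names below are made up for the example. `ControlLogic` only knows abstract events and actions, while `Ev3Abstraction` translates between them and the (hypothetical) raw messages of one concrete robot.

```python
class ControlLogic:
    """Robot-independent mission logic as a simple transition table."""

    # (current state, abstract event) -> (next state, abstract action)
    TRANSITIONS = {
        ("Idle", "CARGO_READY"): ("Lifting", "LIFT"),
        ("Lifting", "LIMIT_REACHED"): ("Holding", "STOP"),
        ("Holding", "RELEASE"): ("Idle", "OPEN_GRIPPER"),
    }

    def __init__(self):
        self.state = "Idle"

    def dispatch(self, event):
        """Fire a transition if one is defined; return its action or None."""
        key = (self.state, event)
        if key not in self.TRANSITIONS:
            return None  # event is ignored in the current state
        self.state, action = self.TRANSITIONS[key]
        return action


class Ev3Abstraction:
    """Abstraction layer: maps raw messages to abstract events and back."""

    RAW_TO_ABSTRACT = {
        "camera/train_arrived": "CARGO_READY",
        "touch/top": "LIMIT_REACHED",
        "user/release": "RELEASE",
    }
    ABSTRACT_TO_RAW = {
        "LIFT": "motor/lift/run",
        "STOP": "motor/all/stop",
        "OPEN_GRIPPER": "motor/gripper/open",
    }

    def __init__(self, logic):
        self.logic = logic

    def on_raw_event(self, raw):
        """Translate a raw message, run the logic, return a raw command."""
        event = self.RAW_TO_ABSTRACT.get(raw)
        if event is None:
            return None  # unknown raw message
        action = self.logic.dispatch(event)
        return self.ABSTRACT_TO_RAW.get(action)
```

Porting the mission to another robot would then mean writing a new class next to `Ev3Abstraction` with different mapping tables, while `ControlLogic` stays untouched.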

Prediction

To compare the desired and the actual behavior of the motors we’ve used OpenModelica, an open-source simulation environment. The purpose of utilizing such a complex modelling tool was twofold: 1) Design-time analysis helps to set the parameters of the controllers: estimations from the simulation helped us choose proper values. 2) Runtime prediction can be built on top of the Modelica models. Querying the limits of the robot in certain situations turned out to be helpful. We benefit from the simulation results especially when the mission reaches a limit in a direction where no sensor information is available. In those cases the simulation can predict the rotation value which is still safe for the system.
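The idea of runtime prediction can be shown with a toy example. The sketch below does not use OpenModelica and is not the project's model: it assumes a simple first-order motor model integrated with the forward Euler method, and the time constant, speeds and limits are invented numbers. It only illustrates how a simulated angle can be checked against a limit before a command is sent.

```python
def simulate_angle(start_deg, target_speed_dps, duration_s,
                   time_constant_s=0.2, dt=0.001):
    """Predict the motor angle after running a speed command.

    Assumed model: d(speed)/dt = (target_speed - speed) / time_constant,
    integrated with forward Euler steps of length dt.
    """
    angle = start_deg
    speed = 0.0  # the motor is assumed to start from rest
    steps = int(round(duration_s / dt))
    for _ in range(steps):
        speed += (target_speed_dps - speed) / time_constant_s * dt
        angle += speed * dt
    return angle


def is_rotation_safe(start_deg, target_speed_dps, duration_s, limit_deg):
    """Check a planned command against an upper angle limit."""
    predicted = simulate_angle(start_deg, target_speed_dps, duration_s)
    return predicted <= limit_deg
```

For example, `is_rotation_safe(0.0, 90.0, 1.0, limit_deg=80.0)` asks whether running the motor at 90 degrees per second for one second stays below a hypothetical 80 degree limit. A controller could call such a check before issuing a command in a direction that has no limit sensor.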

For more information read this post!

Validation & Verification

The controller of the robot arm was developed with the help of Yakindu statecharts. Validation rules provided by the tool and also by ourselves helped to find problems early in design. Verification was also done by transforming the design model into a formal representation and analysing simple reachability queries on it.

Summary

We have developed the control of a Lego robot. Computer vision is used to observe the environment and enable autonomous behaviour of the robot. Simulation is used at design time to estimate the necessary parameters of the control and at runtime to check dangerous movements of the robot arm.

Various IoT and model-based techniques were applied in the project, and the synergies of these technologies led to a complex IoT application!

The integration of the Lego robot with the controller: using MQTT from Python

In the Lego robot subproject of MoDeS3 it was important to integrate the sensor information from the Lego sensors, the control and also the logic responsible for the safety. For this purpose, we have implemented an advanced control protocol in Python. This script runs on an embedded Linux distribution called EV3dev. With this operating system we can use the Lego devices connected to the EV3 brick while still having access to all generic Linux packages, like the mosquitto broker.

A simplified overview of the dependencies is depicted in the next picture:

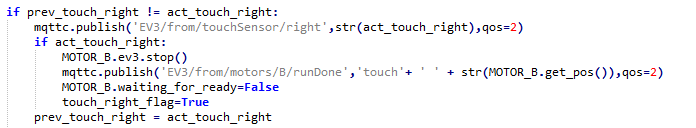

Our logic is able to detect when the motors are overdriven or when the robot is getting into a twisted and dangerous position, and it stops the motors to prevent the robot from pushing further against its limits. Through this protocol, other components can control the crane with MQTT messages, or stop it if any other sensor detects something dangerous.

The corresponding part of the script runs in a cycle and, if it notices a change in the state of the touch sensor, sends a message through MQTT (Paho). If needed, it also executes some safety routines.
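The following is a rough sketch of such a loop, not the original script. The names `read_touch`, `publish` and `stop_motors`, as well as the topic name, are hypothetical; they are passed in as plain functions so that the example runs without the EV3 hardware or a broker. On the robot, `read_touch` would be backed by the EV3dev sensor binding and `publish` by the `publish` method of a Paho client.

```python
import time

TOUCH_TOPIC = "modes3/robot/sensor/touch"  # hypothetical topic name


def watch_touch_sensor(read_touch, publish, stop_motors,
                       cycles, period_s=0.0):
    """Poll a touch sensor and report every state change.

    read_touch()      -> bool, True while the sensor is pressed
    publish(topic, payload) sends one MQTT message
    stop_motors()     is the local safety routine
    """
    last_state = False  # assume the sensor is released at start-up
    for _ in range(cycles):
        state = read_touch()
        if state != last_state:
            publish(TOUCH_TOPIC, "pressed" if state else "released")
            if state:
                # Local reflex: do not wait for the controller to react.
                stop_motors()
            last_state = state
        time.sleep(period_s)
```

Only changes are published, so a sensor that stays pressed produces a single message and a single motor stop instead of flooding the broker on every cycle.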

The sensors of the hardware, which provide this information, are depicted in the following figures:

The solution is built modularly: each part is responsible for certain movements and sensor information. The control software enables the user to control any of the motors individually and to get back raw sensor data through MQTT. A safety module observes the behaviour and the available information, and intervenes if something goes wrong.

Controlling and ensuring safety of the Lego robot arm: the computer vision challenge

As the Lego robot arm executes a mission, information about the environment is required. Besides executing missions correctly, our goal is to detect any kind of danger caused by the robot. The goal is twofold. First, the robot has to know when to execute a mission, i.e. when the object to be moved is at the right place. Second, it has to stop when a dangerous situation occurs, for example when a human is present near the robot.

We are building the monitoring infrastructure of the robot arm based on computer vision technologies. OpenCV helps us detect and track the movements of the robot. While the robot is moving, no other moving objects should be in the area. In addition, computer vision detects whether the object to be transported is in the proper place to be handled by the robot.

At first we built only a robot arm with limited functionality. Based on our experience with it, we have completely rebuilt it.

Rebuilding the robot was a big step forward for the project’s computer vision goals. Now, we are able to detect the orientation and also the movement of the arm, without markers.

The new concept is to attach a combination of Lego elements that forms a larger component with a distinctive shape and colour. The camera observes the whole loading area from the top and searches for these elements.

Using the same camera frames, we can detect the orientation of the gripper and find the cargos and the train.

For the gripper we needed a marker in addition to the Lego element mentioned above. The marker is directly connected to the gripper’s motor, so it moves with the gripper during rotation and other movements. The orientation is measured relative to the arm’s orientation, so it does not change while the arm moves; only the rotation of the gripper influences it. The marker is a black circle, therefore we replaced colour detection with circle detection in this case.

The cargo has a distinctive colour.

In the following picture the output of the various steps of the process is depicted.

In the following we just sketch how the detection algorithms work. First, the camera picture is transformed to the HSV (Hue-Saturation-Value) representation, which serves as the base representation for further processing. The next step is to decompose the picture according to the information we are looking for. To ease further processing, the picture is cut into pieces: the Lego arm, the gripper and the object to be moved will be in different pieces of the picture.

To detect the various objects of interest, we need to assess the colour and the size of the objects in the picture; this happens in the next phase of the processing.
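The first steps (HSV conversion, cutting the picture into pieces and a colour check) can be sketched in plain Python. This is only an illustration on a tiny hand-made image and not the project's OpenCV code: the standard `colorsys` module stands in for OpenCV's `cv2.cvtColor(..., cv2.COLOR_BGR2HSV)` and `cv2.inRange`, and the hue range and thresholds are assumed values.

```python
import colorsys


def rgb_to_hsv_degrees(r, g, b):
    """Convert 8-bit RGB to (hue in degrees, saturation, value)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return h * 360, s, v


def crop(image, top, left, height, width):
    """Cut a rectangular piece out of an image given as rows of pixels."""
    return [row[left:left + width] for row in image[top:top + height]]


def color_mask(image, hue_range, min_saturation=0.5, min_value=0.3):
    """Mark the pixels whose colour falls into the given hue range.

    Saturation and value thresholds reject grey and very dark pixels,
    whose hue is not meaningful.
    """
    low, high = hue_range
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = rgb_to_hsv_degrees(r, g, b)
            mask_row.append(
                low <= h <= high and s >= min_saturation and v >= min_value
            )
        mask.append(mask_row)
    return mask
```

Working in HSV makes the colour check a simple range test on the hue, which is less sensitive to lighting changes than comparing raw RGB values.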

Edge detection algorithms search for the contour of the objects. Pattern matching algorithms try to find rectangles in the picture.

Numerical filters are then used to sort the found objects (rectangles) according to their size. From the filtered objects, some special heuristics select those which are likely to be the searched objects, namely the arm, the gripper and the load to be moved.
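A size filter of this kind might look like the sketch below. The `Box` type, the thresholds and the aspect-ratio heuristic are assumptions made for the example; the actual heuristics of the project are not described in detail here.

```python
from typing import NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding rectangle of a detected contour, in pixels."""
    x: int
    y: int
    w: int
    h: int

    @property
    def area(self):
        return self.w * self.h

    @property
    def aspect(self):
        """Ratio of the longer side to the shorter side (always >= 1)."""
        short, long_ = sorted((self.w, self.h))
        return float("inf") if short == 0 else long_ / short


def select_candidates(boxes, min_area, max_area, max_aspect):
    """Keep plausible rectangles and sort them by size, largest first.

    Very small boxes are treated as noise, very large ones as background,
    and very elongated ones as edges or shadows.
    """
    kept = [
        box for box in boxes
        if min_area <= box.area <= max_area and box.aspect <= max_aspect
    ]
    return sorted(kept, key=lambda box: box.area, reverse=True)
```

The thresholds would be tuned per object type, for example one set for the arm and another for the cargo, since their expected sizes in the top-down camera view differ.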

The movement of the arm is traced by reducing the problem to finding the moving rectangles in the filtered picture. Computing averages and tracing the middle point of the objects provides quite precise results.
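As an illustration of the averaging idea, here is a small sketch that tracks the middle point of a rectangle over the last few frames. The class name, the window size and the movement threshold are assumptions for the example, not values from the project.

```python
import math
from collections import deque


class CentroidTracker:
    """Smooth the centre of a tracked rectangle over the last frames."""

    def __init__(self, window=5):
        # Only the most recent `window` centres are kept.
        self.history = deque(maxlen=window)

    def update(self, box):
        """Add the box (x, y, w, h) of a new frame; return the mean centre."""
        x, y, w, h = box
        self.history.append((x + w / 2, y + h / 2))
        n = len(self.history)
        mean_x = sum(cx for cx, _ in self.history) / n
        mean_y = sum(cy for _, cy in self.history) / n
        return mean_x, mean_y

    def is_moving(self, threshold_px=2.0):
        """Compare the oldest and newest centre inside the window."""
        if len(self.history) < 2:
            return False
        (x0, y0), (x1, y1) = self.history[0], self.history[-1]
        return math.hypot(x1 - x0, y1 - y0) > threshold_px
```

Averaging over a few frames suppresses the jitter of single detections, at the cost of a short delay before a real movement shows up in the smoothed position.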

So, as you might see, many algorithms work on the control and safety assurance of the Lego robot arm. Despite its complexity, it works well in practice!