In the last two months we have invested much time to demonstrate model-based development with the help of a mixed-critical model railway system. We wanted to show the newest techniques that can be used to develop highly reliable safety critical systems.

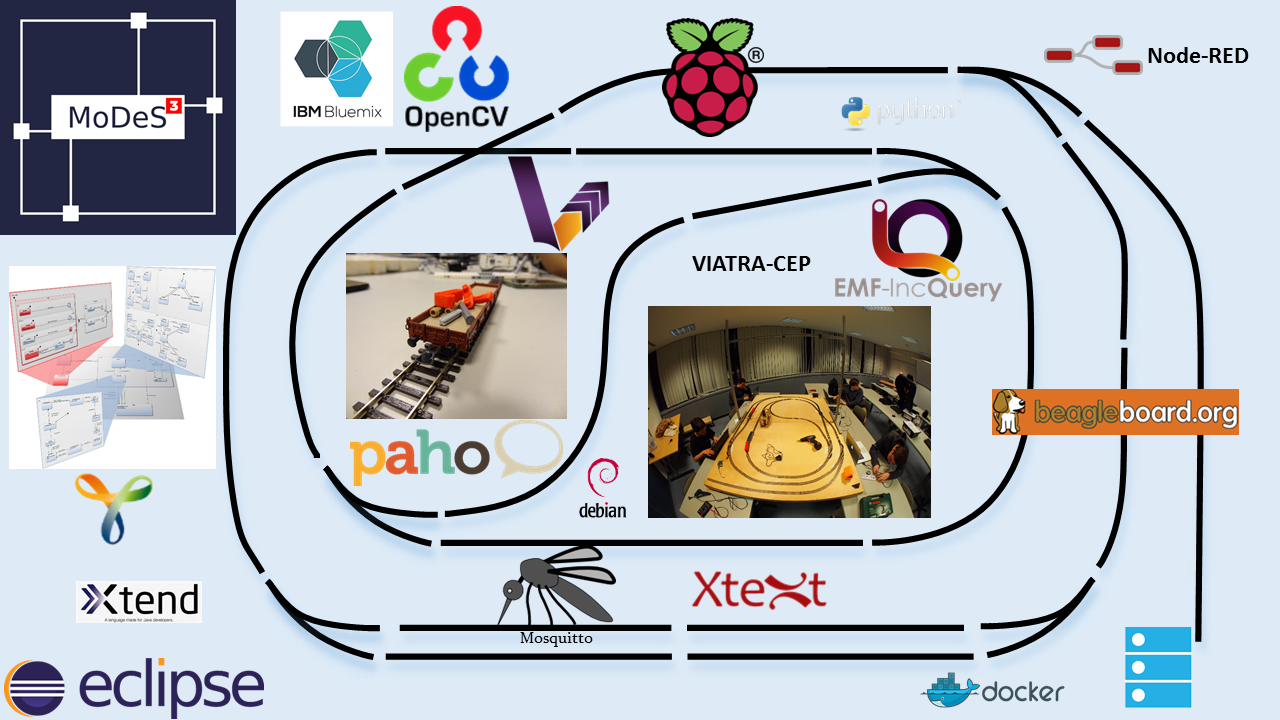

We started from an old hardware architecture, but for this project we have completely redesigned the hardware architecture to meet the new requirements of the envisioned system – we wanted to demonstrate various development, verification, and validation techniques, integrate them, and use a real-time open-source operating system on the controllers. After the architectural plans were made, we’ve decided to use BeagleBone Blacks that’ll run a special Debian based Linux (with a real-time kernel developed by Texas Instruments).

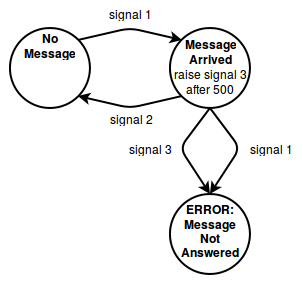

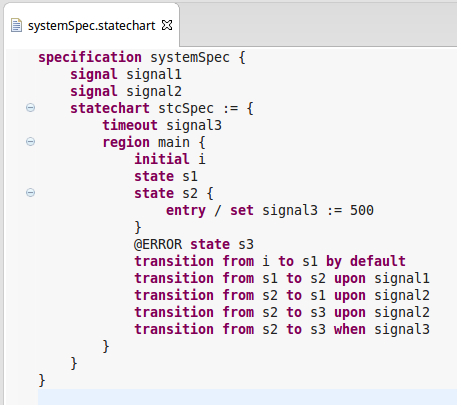

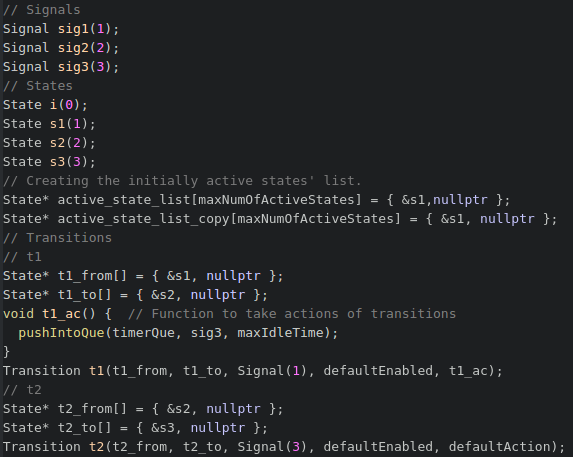

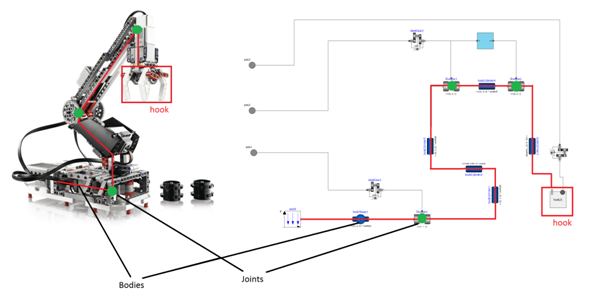

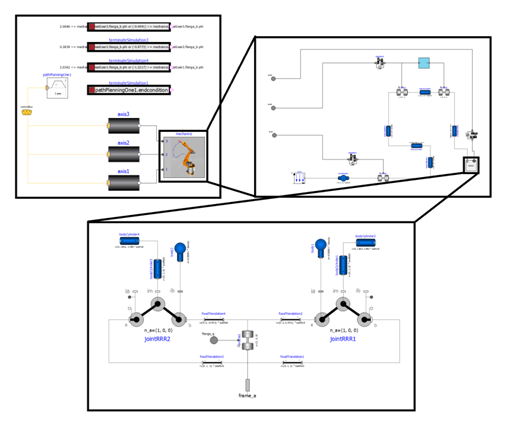

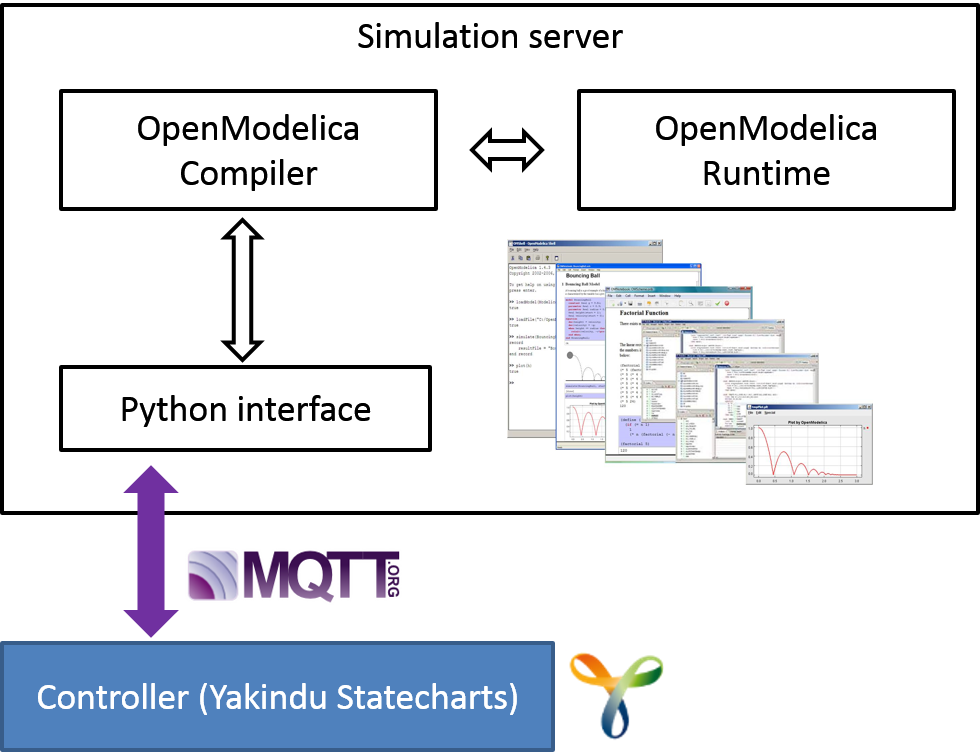

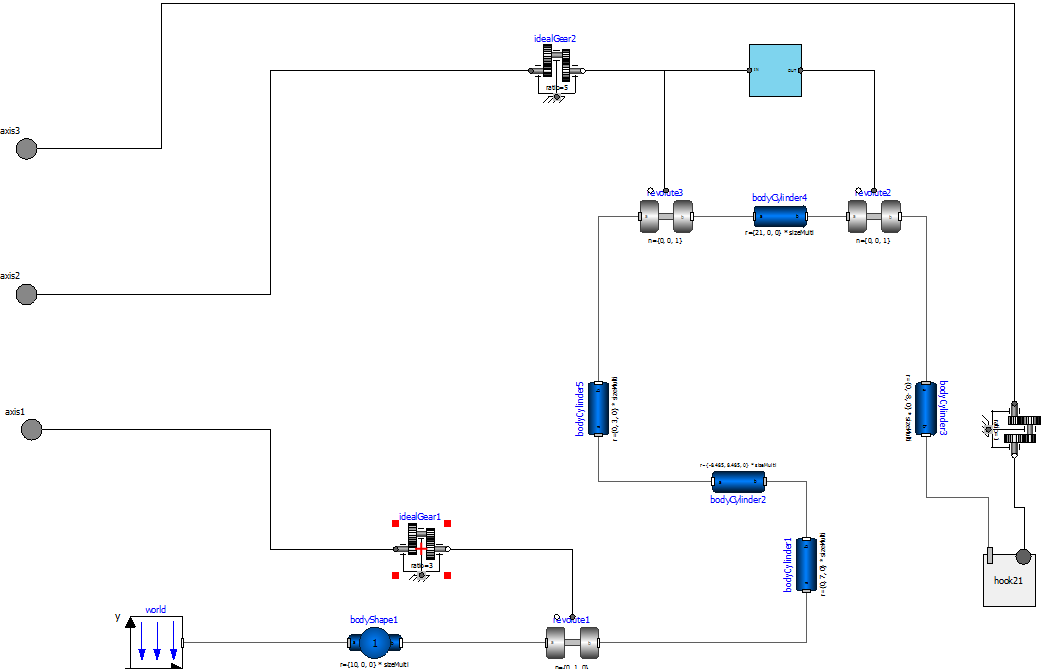

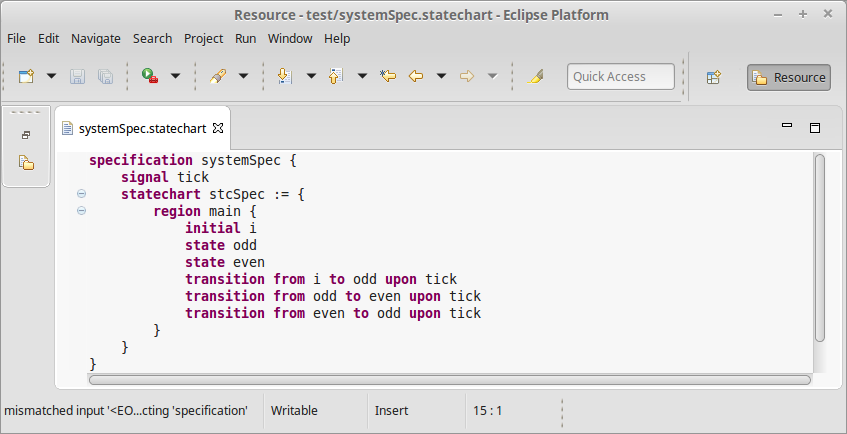

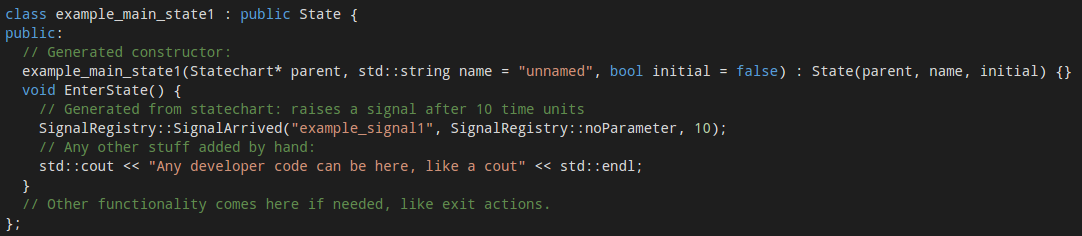

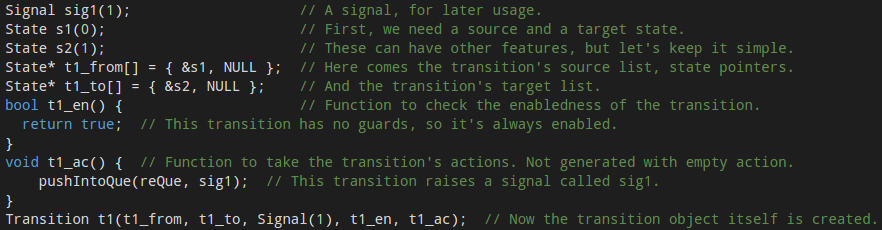

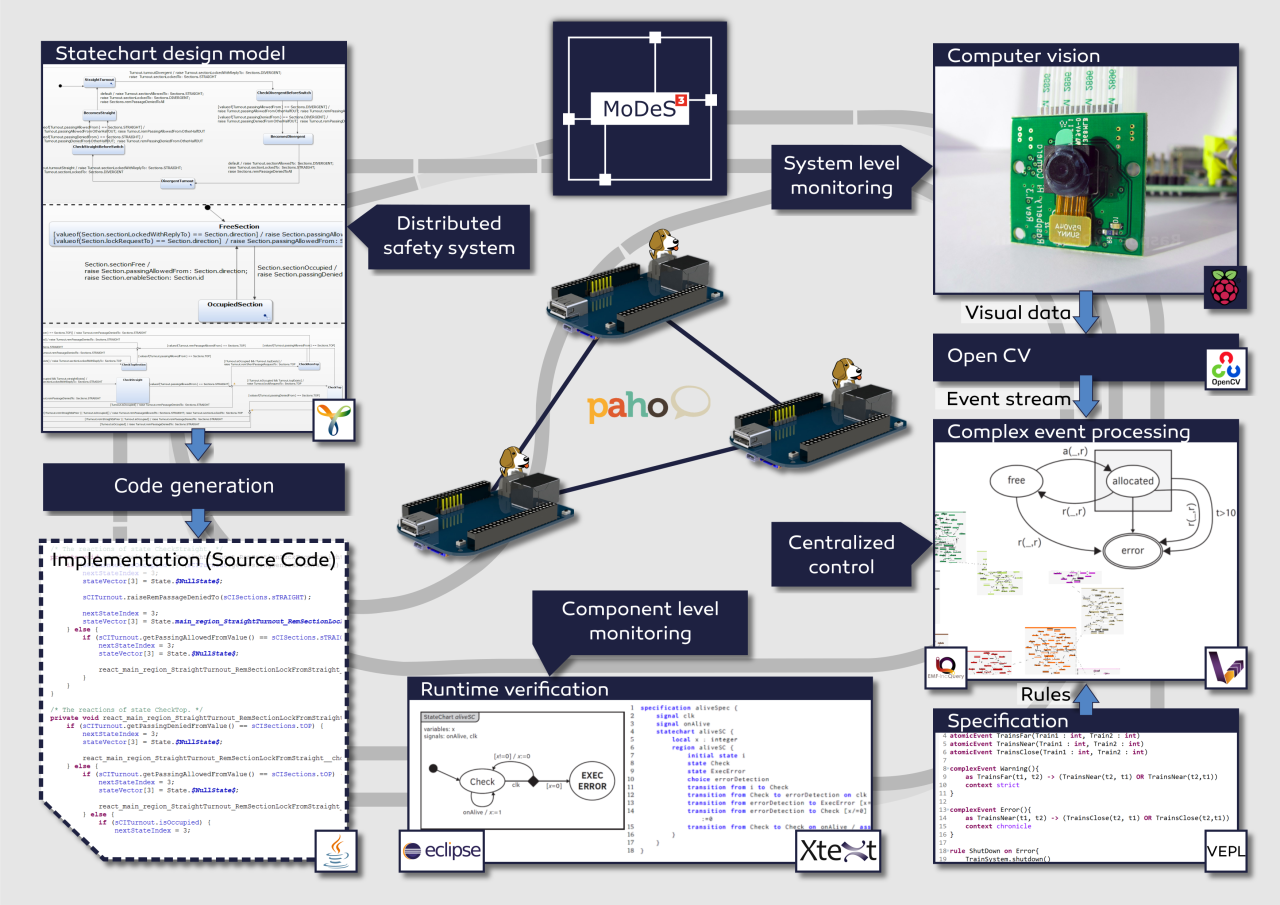

The development of safety critical systems starts by clarifying the requirements – in this case our goal is avoiding crashes and accidents. Then comes the more difficult part: model-based development of the distributed logic. This allows verification and code generation based on the model, resulting in a more reliable system. This was conducted in the open-source Yakindu Statecharts Tool. Validation and verification rules were utilized to find design problems at the early stages of development. The validation rules were defined with the help of the open-source IncQuery engine (which is used for graph-queries), while the transformation rules were implemented in the VIATRA framework. The source code of the distributed logic was generated automatically from the design models.

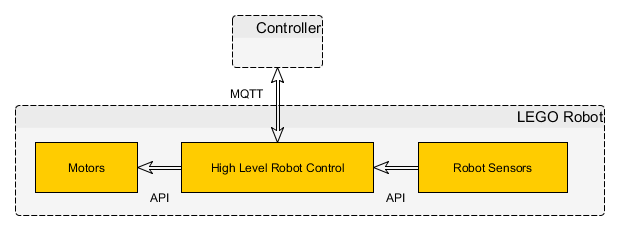

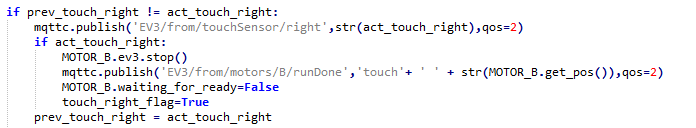

MQTT is used as a communication platform, Mosquitto and Paho provided the technology to implement the communication infrastructure of the system. These IoT technologies proved their applicability in time critical systems. Our experience was that they are not only easy to use, but these technologies provide realiability and the performance needed for our project.

Conceptually, we had two different levels of monitoring. One level runs on the programmable real-time unit of the BeagleBone hardware, and another one runs at the system level.

We have developed a language to support the runtime verification of the local components. A special statechart language is provided based on Xtext from which we can generate embedded monitors.

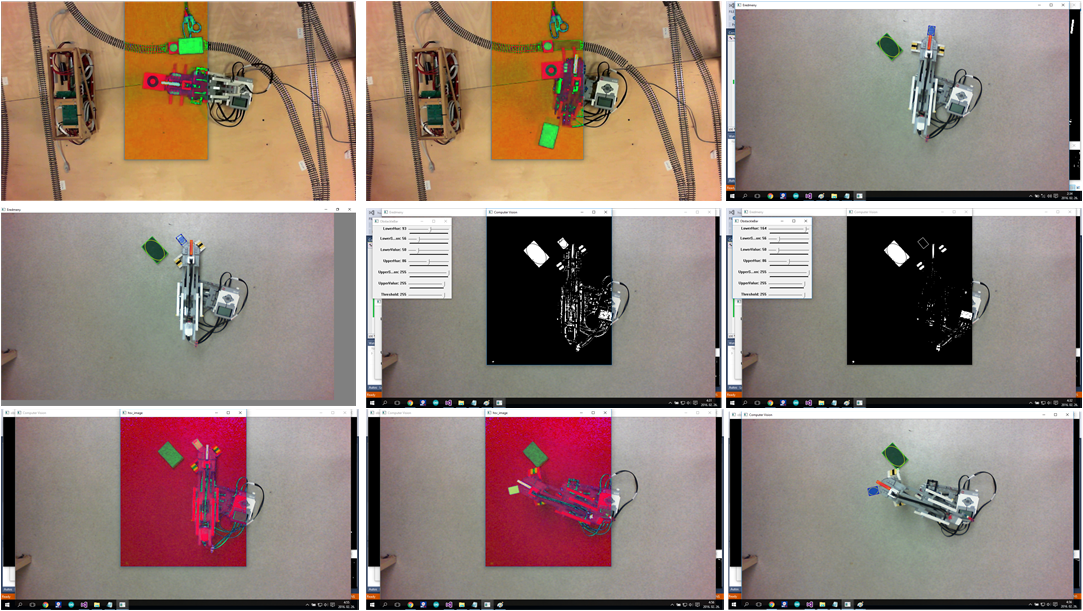

On the top level, there is a computer vision based monitoring system. The video is processed real-time by OpenCV, which is an open-source library of computer vision techniques. An open-source comlex-event processing engine maintains an abstract view represented in a live model. IncQuery patterns are used to define the critical event in the system and VIATRA-CEP processes the event stream. Our developments will hopefully appear in the future in the official version of VIATRA-CEP.

During this challenge one of the main difficulties regarding the complex-event processing part was creating a program module which can be later integrated to an open-source project.

The not technology related complexity was to design and implement a mathematical formalism which is appropiate for using in Complex Event Processing Frameworks.

Using the open-source IoT technologies of Eclipse was challenging at first but since they are well-documented and intuitive to use they were simple to use after all. Integrating the complex-event processing engine to other open-source communication technologies was not difficult.

We have tested the system in the IBM Bluemix cloud in Docker containers and also on the real hardware. Node-RED flows and the generated statechart codes have been deployed into IBM Bluemix. Each turnout has its own statechart and they run separately, connected through the Node-RED flow, to make the communication easier and to use cutting-edge Internet of Things technolgy!

The high-level overview of the system is depicted on the next picture.

During the development we have faced theoretical challenges such as the new formalism for complex-event processing, the development of the distributed safety-logic or the verification of the components. On the other side, we have solved many technology related problems, integration tasks or more involved problems.

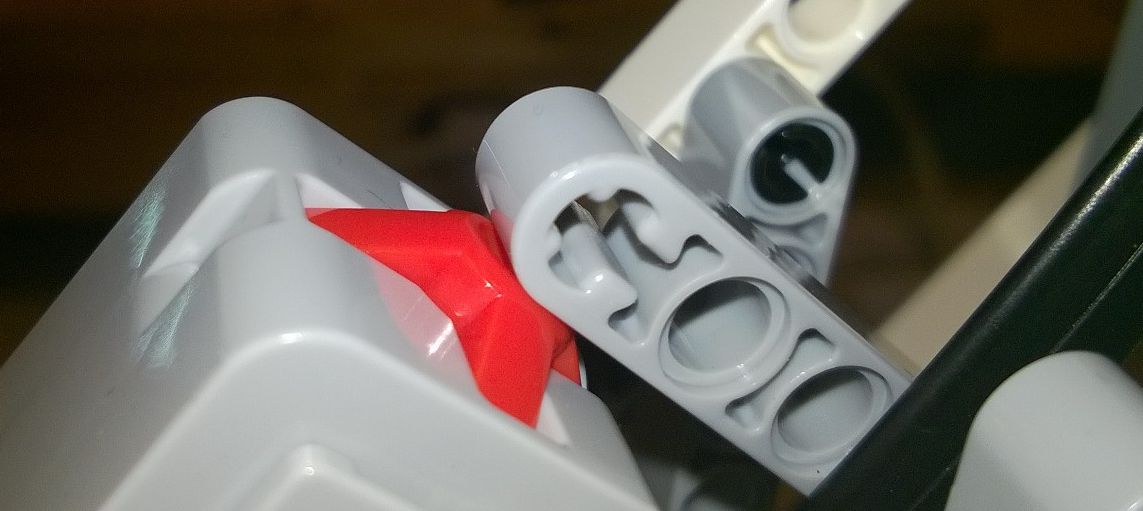

Besides, we have shot some videos as well: Model Railway Track Side Perspective, Model Railway Onboard Footage #1 and #2, Restlessly building the hardware. The videos are available at our blog.

Here is a picture for a quick overview of the technologies we used.

During the developments, we have also contributed to the open-source world! Besides, all modules we implemented are open source and available in our GitHub repository.

For more details, read our blog!