Simulation is an important tool in complex cyber-physical and IoT applications, as it can provide:

- analysis at design time, during development

- prediction at runtime

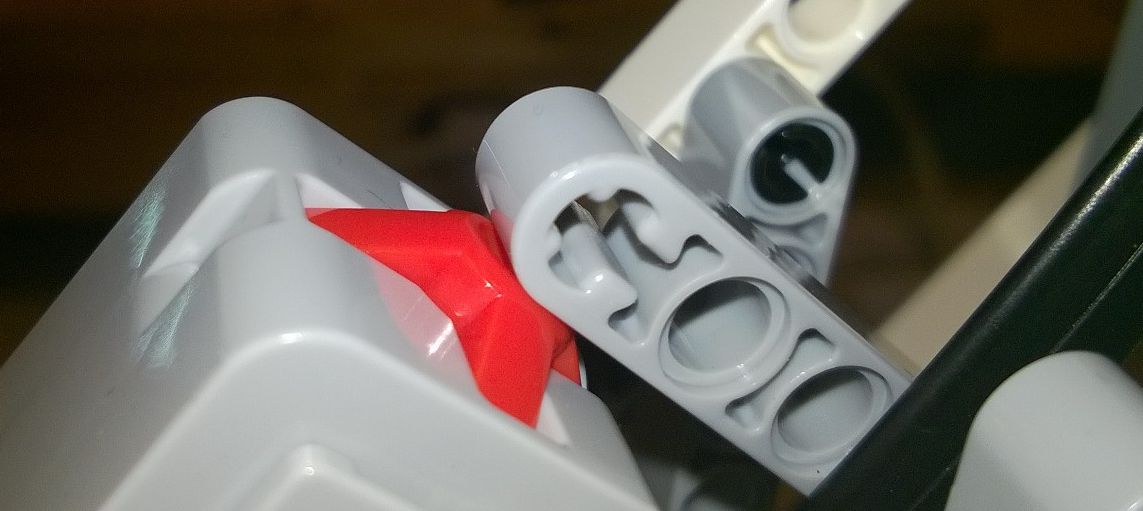

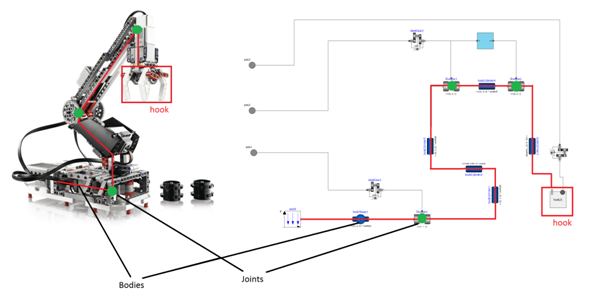

We have developed the physical model of the robot arm (crane) in the open-source OpenModelica framework.

The project describes its goal as follows: "The goal with the OpenModelica effort is to create a comprehensive Open Source Modelica modeling, compilation and simulation environment based on free software distributed in binary and source code form for research, teaching, and industrial usage."

OpenModelica is a rich environment: it offers many built-in functions and libraries, and it can compute the complex behaviour of hybrid systems. Our robot arm is inherently hybrid, as the controller has discrete modes while the physical system is continuous.
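To illustrate what "hybrid" means here, below is a minimal Python sketch (not our Modelica model) of a single joint: the motor dynamics are continuous, while a discrete controller switches between two modes. The mode names `MOVE` and `HOLD`, the idealized brake and all parameter values are hypothetical. OpenModelica handles such mode switches far more rigorously (event detection via zero-crossing functions, variable-step solvers) than this fixed-step explicit Euler loop.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointParams:
    inertia: float = 0.01      # J [kg*m^2], hypothetical value
    damping: float = 0.1       # b [N*m*s/rad], hypothetical value
    torque_gain: float = 0.5   # K [N*m per unit command], hypothetical value


def simulate_hybrid(target: float, params: JointParams = JointParams(),
                    dt: float = 1e-3, t_end: float = 5.0, tol: float = 0.01):
    """Simulate one joint with a two-mode controller using explicit Euler.

    Continuous part: J * dw/dt = K * u - b * w,  dtheta/dt = w.
    Discrete part: in mode "MOVE" a constant command drives the joint toward
    the target; once the angle error is below `tol`, the controller switches
    to "HOLD" (command 0, velocity reset to 0 as an idealized brake).
    Returns a list of (time, angle, mode) samples.
    """
    theta, omega, mode, t = 0.0, 0.0, "MOVE", 0.0
    trace = [(t, theta, mode)]
    while t < t_end:
        # Discrete controller: check the mode-switching condition (the "event").
        if mode == "MOVE" and abs(target - theta) < tol:
            mode = "HOLD"
            omega = 0.0  # idealized brake engages instantly
        if mode == "MOVE":
            u = 1.0 if target > theta else -1.0
        else:
            u = 0.0
        # Continuous plant: one explicit Euler step of the motor dynamics.
        alpha = (params.torque_gain * u - params.damping * omega) / params.inertia
        omega += alpha * dt
        theta += omega * dt
        t += dt
        trace.append((t, theta, mode))
    return trace


trace = simulate_hybrid(1.0)
print(trace[-1][2], round(trace[-1][1], 3))
```

With these parameters the joint approaches a steady speed of K/b = 5 rad/s, so it advances at most about 0.005 rad per step and cannot jump over the ±0.01 rad switching window.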

We have built the model of the robot arm.

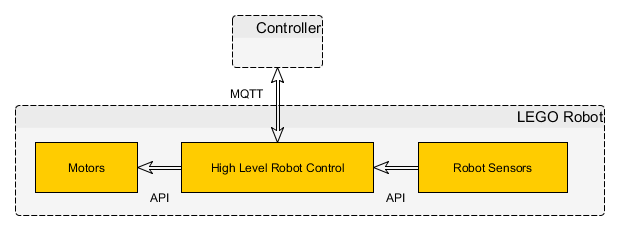

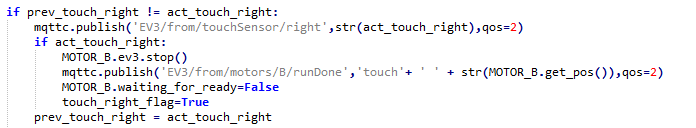

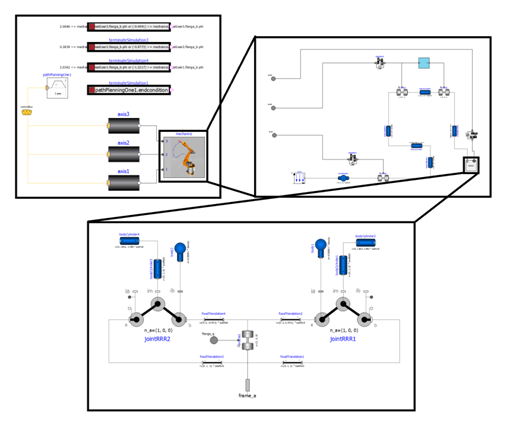

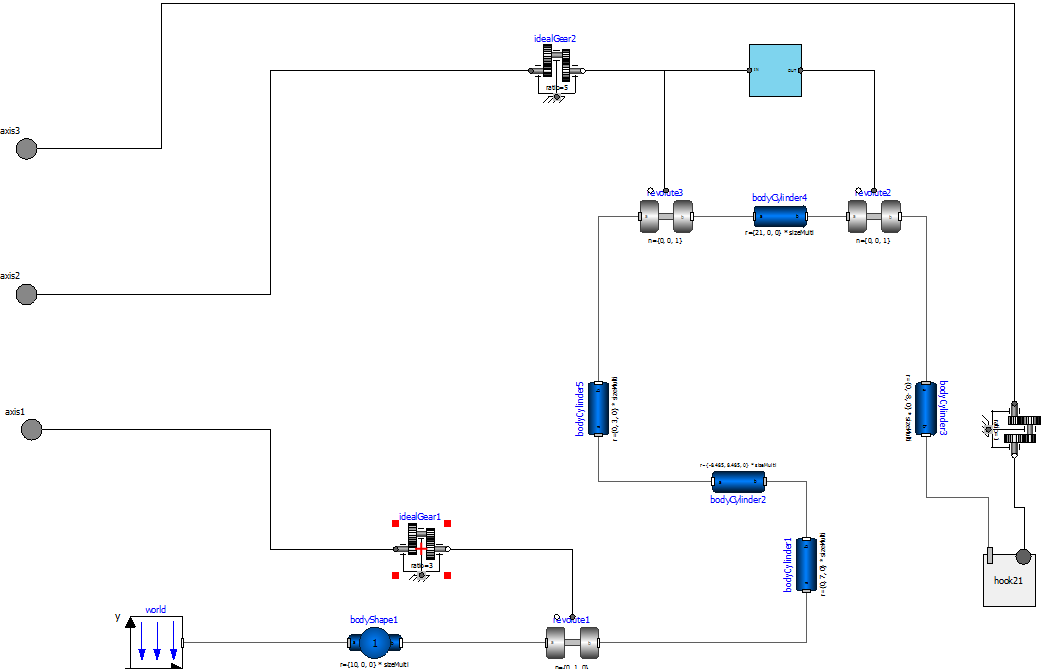

By decomposing the physical model into smaller pieces, we get the high-level Modelica model depicted in the following figure.

This hierarchical model can be further refined according to the parts of each physical component.

We used many different components of the library and parameterized them according to our measurements, and the result is quite close to reality: compared against the measurements, the model predicted the real physical behaviour with only 2-3% error. This is quite a good result 🙂
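The post does not state which error metric the 2-3% refers to. As a hedged illustration only, one common choice is the root-mean-square error normalized by the range of the measured signal, sketched below in Python. The function name `normalized_rmse` is hypothetical, and the sample values in the usage line are made up for illustration; they are not our measurements.

```python
import math
from typing import Sequence


def normalized_rmse(measured: Sequence[float], simulated: Sequence[float]) -> float:
    """RMS error between two equally sampled signals, divided by the measured range."""
    if not measured or len(measured) != len(simulated):
        raise ValueError("signals must be non-empty and of equal length")
    mse = sum((m - s) ** 2 for m, s in zip(measured, simulated)) / len(measured)
    span = max(measured) - min(measured)
    if span == 0:
        raise ValueError("measured signal is constant; range normalization undefined")
    return math.sqrt(mse) / span


# Illustrative (made-up) samples of a joint angle, measured vs. simulated.
err = normalized_rmse([0.0, 0.5, 1.0, 1.5, 2.0], [0.0, 0.52, 1.03, 1.54, 2.05])
print(f"{err:.1%}")  # about 1.6% for these made-up values
```

Normalizing by the range rather than point-by-point avoids division by near-zero values when the signal passes through zero, which is common for joint angles.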

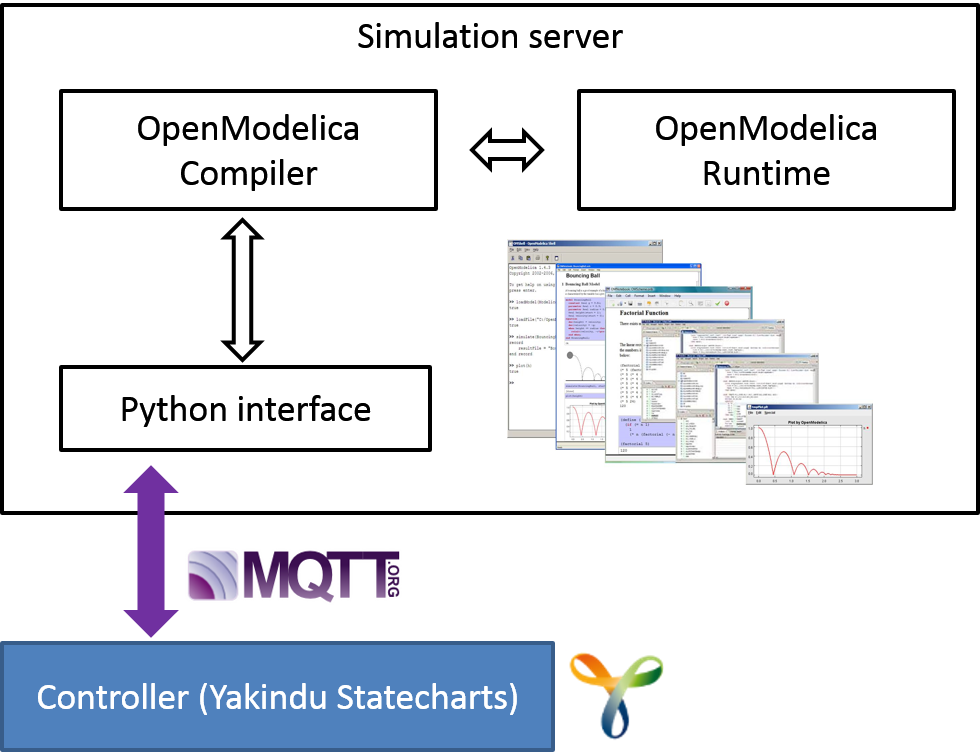

The simulation is provided to the controller as a service running on a separate Linux virtual machine. We developed a virtual controller that compiles the uploaded Modelica files with the given parameters and runs the simulation. The results can be shown by the web server of this virtual machine, or they can be sent back to the controller.

The overview of the architecture is depicted in the next figure.
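The compile-and-simulate step of such a service could look roughly like the Python sketch below. It is not our service code; it assumes the virtual machine has OpenModelica's `omc` compiler on the PATH and uses standard OpenModelica scripting commands (`loadModel`, `loadFile`, `simulate`, `getErrorString`). Parameters are passed to the simulation runtime through its `-override` flag. The model name `Crane`, the file `Crane.mo`, the parameter names and the helper functions are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import Mapping


def build_mos_script(model_file: str, model_name: str,
                     params: Mapping[str, float], stop_time: float = 10.0) -> str:
    """Return an OpenModelica script (.mos) that loads and simulates a model,
    overriding the given parameter values at simulation start."""
    overrides = ",".join(f"{name}={value}" for name, value in params.items())
    simflags = f"-override={overrides}" if overrides else ""
    return "\n".join([
        "loadModel(Modelica);",        # load the Modelica Standard Library
        f'loadFile("{model_file}");',  # load the uploaded model file
        f'simulate({model_name}, stopTime={stop_time}, simflags="{simflags}");',
        "getErrorString();",           # print any compiler/simulation errors
    ])


def run_simulation(script: str, workdir: Path) -> str:
    """Write the script into `workdir` and execute it with `omc` there."""
    script_path = workdir / "run.mos"
    script_path.write_text(script)
    result = subprocess.run(["omc", script_path.name], cwd=workdir,
                            capture_output=True, text=True, check=True)
    return result.stdout


print(build_mos_script("Crane.mo", "Crane", {"m": 1.5, "l": 0.3}))
```

By default OpenModelica writes the results to `<ModelName>_res.mat` in the working directory, so a web server on the virtual machine could serve that file, or the service could send it back to the controller.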

This way, simulation is available both at design time and at runtime through a simple interface!

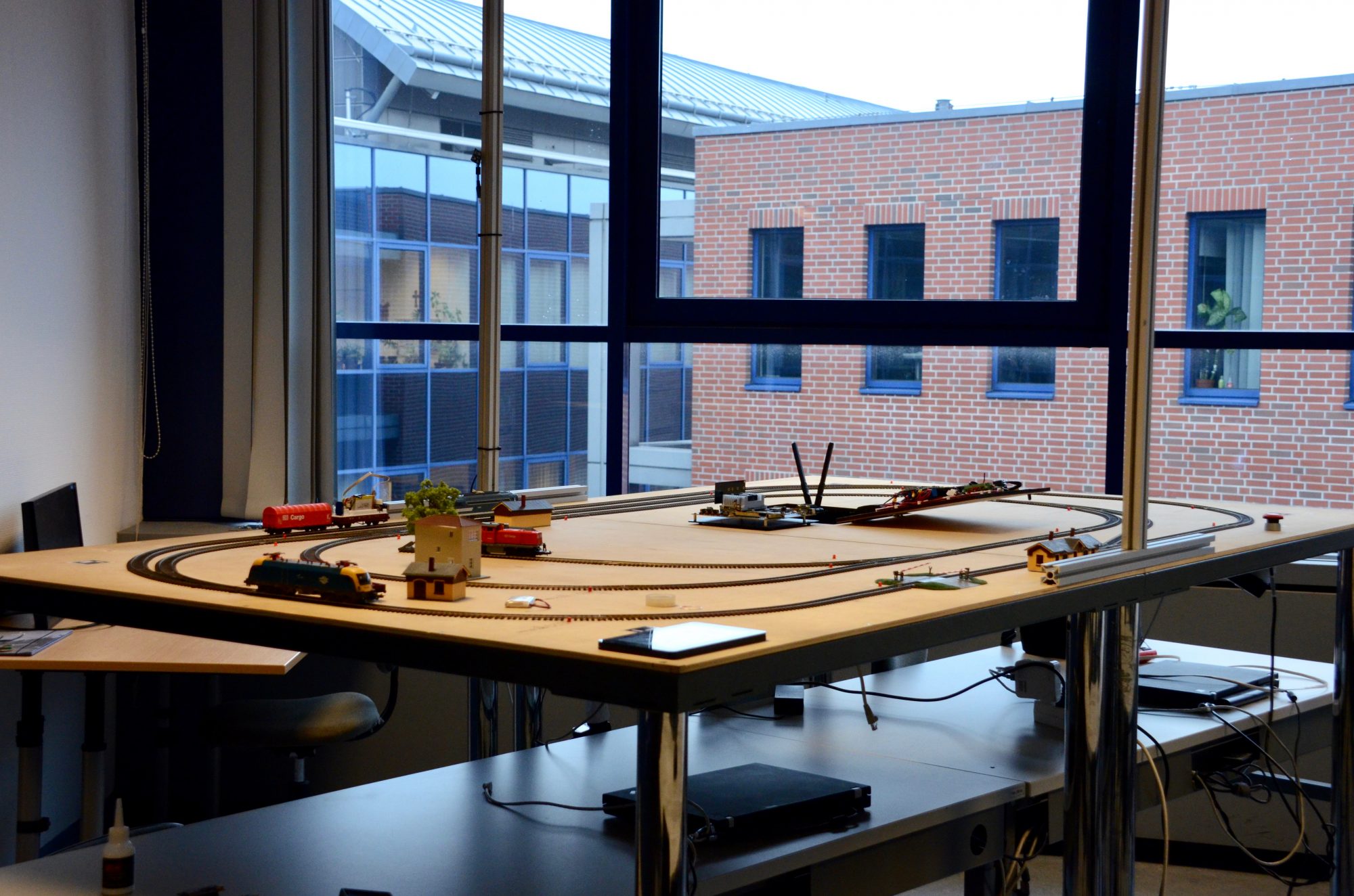

Now let's look at a simple simulation scenario! The model in the next picture is simulated.

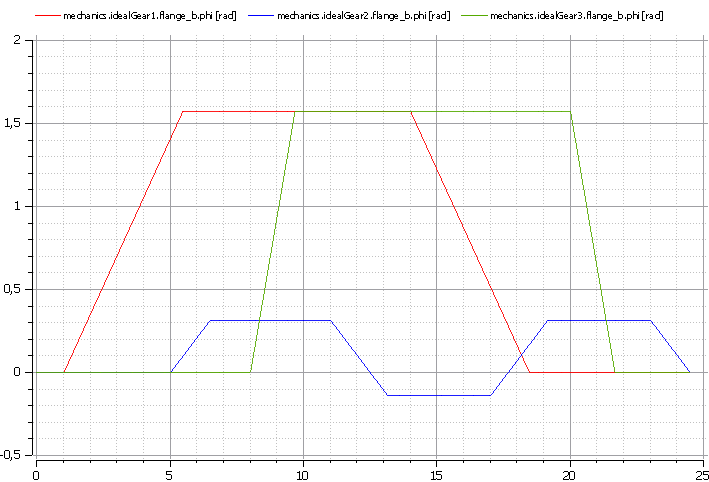

The angles of the three motors as a function of time are depicted in the next picture.

As can be seen, the three motors can work independently at different speeds! Note that this is just a simple example where only a few parameters are examined; however, the tool is able to evaluate more complex parameter settings and movements of the system.
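The idea of independently driven joints can be sketched kinematically in Python: each motor moves from its start angle to its target at its own constant speed and then stops, and all three are sampled on a common time grid without interacting. This is a simplified illustration, not the dynamic Modelica model; the class `MotorMove`, the helper functions and all angles and speeds are hypothetical and are not the values behind the figure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MotorMove:
    start: float   # initial angle [deg]
    target: float  # goal angle [deg]
    speed: float   # constant angular speed [deg/s], must be > 0


def angle_at(move: MotorMove, t: float) -> float:
    """Angle of one motor at time t for a constant-speed move that stops at the target."""
    distance = move.target - move.start
    travelled = min(abs(distance), move.speed * t)
    return move.start + travelled * (1 if distance >= 0 else -1)


def sample_trajectories(moves, t_end: float, dt: float):
    """Sample every motor on a common time grid; the motors do not interact."""
    steps = int(round(t_end / dt))
    times = [k * dt for k in range(steps + 1)]
    return times, [[angle_at(m, t) for t in times] for m in moves]


# Three hypothetical moves with different speeds and directions.
moves = [MotorMove(0, 90, 30), MotorMove(0, 45, 10), MotorMove(0, -60, 20)]
times, angles = sample_trajectories(moves, t_end=5.0, dt=0.5)
for i, series in enumerate(angles, start=1):
    print(f"motor {i}: {series[-1]} deg at t = {times[-1]} s")
```

Because each joint's angle depends only on its own move, changing one motor's speed leaves the other two trajectories unchanged, which is the independence visible in the plot.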

Simulation is useful both at design time and at runtime: with it, various questions regarding the path of the robot arm can be answered!